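Domestic Server Test Report

1. Test Scope and Objectives

The tests deploy GIS-related software on the server: a typical development stack, common middleware, databases and the PostGIS extension, followed by a deployment test of a real project. The goal is to determine whether a domestic server in a Xinchuang (信创) environment can reliably host a production deployment and deliver good response times. The tests cover:

- GeoServer compatibility and stress testing

- Compatibility testing of two mainstream domestic databases (Kingbase and Highgo) and their GIS extensions

- Application compatibility and stress testing

- Middleware (Nginx, Redis) compatibility testing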

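2. Test Environment

Environment overview

| Item | CPU (cores) | Memory (GB) | System disk (GB) | Data disk (GB) | Cloud provider | Bandwidth |
|---|---|---|---|---|---|---|
| Value | 8 | 32 | 40 | 500 | China Telecom Xinchuang Cloud | 1.5G/s |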

Operating system

Kylin (银河麒麟) V10 SP1

NAME="Kylin Linux Advanced Server"

VERSION="V10 (Tercel)"

ID="kylin"

VERSION_ID="V10"

PRETTY_NAME="Kylin Linux Advanced Server V10 (Tercel)"

ANSI_COLOR="0;31"

Linux version 4.19.90-23.8.v2101.ky10.x86_64 (KYLINSOFT@localhost.localdomain) (gcc version 7.3.0 (GCC)) #1

CPU

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 48 bits physical, 48 bits virtual

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 1

Core(s) per socket: 8

Socket(s): 1

NUMA node(s): 1

Vendor ID: AuthenticAMD

CPU family: 24

Model: 1

Model name: Hygon C86 7280 32-core Processor

Stepping: 1

CPU MHz: 1999.999

BogoMIPS: 3999.99

Virtualization: AMD-V

Hypervisor vendor: KVM

Virtualization type: full

NUMA node0 CPU(s): 0-7

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Full AMD retpoline, STIBP disabled, RSB filling

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core cpb vmmcall fsgsbase tsc_adjust bmi1 avx2 smep bmi2 rdseed adx smap xsaveopt xsavec xgetbv1 arat npt nrip_save

Memory

total used free shared buff/cache available

Mem: 30Gi 228Mi 3.7Gi 12Gi 26Gi 17Gi

Swap: 0B 0B 0B

Disk

Filesystem Size Used Avail Use% Mounted on

devtmpfs 16G 0 16G 0% /dev

tmpfs 16G 0 16G 0% /dev/shm

tmpfs 16G 25M 16G 1% /run

tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/vda1 40G 17G 21G 45% /

tmpfs 16G 0 16G 0% /tmp

tmpfs 3.1G 0 3.1G 0% /run/user/0

tmpfs 12G 12G 0 100% /tmp/memory

3. Detailed Report

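GeoServer compatibility and stress testing

Test baseline:

Resource consumption limits

- Max rendering memory (KB): 65536

- Max rendering time (s): 600

- Max rendering errors (count): 1000

Dependencies:

JDK: jdk1.8.0_381

Tomcat: apache-tomcat-9.0.84

GeoServer: geoserver-2.22.5

Test 1: installation and domestic database compatibility

GeoServer provides a suitable installation package and can be deployed quickly under Tomcat. It connects to the domestic Kingbase database and publishes services from it.

Test 2: publishing and previewing map services

Test data:

10,364,441 land parcels (图斑), i.e. map data at the ten-million-record scale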

Basic operation tests

| Test item | Result |
|---|---|
| GeoServer admin UI loading | OK |
| Workspace management | OK |
| Store management | OK |
| Layer management | OK |
| Layer preview | OK |

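Data browsing and loading test

Purpose and method: with the same data source, compare rendering results for different BBOX values. Data size is the size of the returned PNG; response time is measured from sending the request until the rendered image is received. Each BBOX is requested six times and the results are averaged.

Test parameters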

| NAME | VALUE |
|---|---|
| SERVICE | WMS |
| VERSION | 1.1.1 |
| REQUEST | GetMap |
| FORMAT | image/png |
| TRANSPARENT | true |
| LAYERS | testSpace:milliondata |
| exceptions | application/vnd.ogc.se_inimage |
| SRS | EPSG:4490 |
| WIDTH | 768 |
| HEIGHT | 408 |
| BBOX | 115.191650390625,26.707763671875,123.629150390625,31.190185546875 |
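The parameters above map directly onto a WMS GetMap query string. The following is a minimal sketch of how such a request URL could be assembled; the endpoint in `BASE_URL` (host, port and context path) is a placeholder assumption, since the report does not state it.

```python
from urllib.parse import urlencode

# Parameters copied from the table above.
params = {
    "SERVICE": "WMS",
    "VERSION": "1.1.1",
    "REQUEST": "GetMap",
    "FORMAT": "image/png",
    "TRANSPARENT": "true",
    "LAYERS": "testSpace:milliondata",
    "exceptions": "application/vnd.ogc.se_inimage",
    "SRS": "EPSG:4490",
    "WIDTH": "768",
    "HEIGHT": "408",
    "BBOX": "115.191650390625,26.707763671875,123.629150390625,31.190185546875",
}

# Hypothetical endpoint: the report does not give the host, port or path.
BASE_URL = "http://X.X.X.X:8080/geoserver/testSpace/wms"


def build_getmap_url(base_url: str, query: dict) -> str:
    """Return the base URL followed by the percent-encoded query string."""
    return base_url + "?" + urlencode(query)


url = build_getmap_url(BASE_URL, params)
print(url)
```

Changing only the `BBOX` entry reproduces the six extents used in the loading test.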

Test results

| BBOX | Average response time (s) | Average response size (KB) |
|---|---|---|
| 115.191650390625,26.707763671875,123.629150390625,31.190185546875 | 198 (3.3 min) | 43.4 |
| 117.3394775390625,27.9656982421875,121.5582275390625,30.2069091796875 | 78 (1.3 min) | 142 |
| 118.42987060546875,28.58367919921875,120.53924560546875,29.70428466796875 | 35.86 | 222 |
| 118.95721435546875,28.81988525390625,120.01190185546875,29.38018798828125 | 18.34 | 213 |
| 119.24560546875,28.950347900390625,119.77294921875,29.230499267578125 | 12.86 | 153 |
| 119.3994140625,28.98914337158203,119.6630859375,29.12921905517578 | 5.07 | 89.8 |
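The six BBOX values in the results table are easier to compare once reduced to width and height in degrees. The sketch below computes each extent from the table values (with the stray space in the third BBOX removed) and the ratio between consecutive widths. It illustrates the test design and is not part of the original test scripts.

```python
# BBOX strings copied from the results table, largest extent first.
BBOXES = [
    "115.191650390625,26.707763671875,123.629150390625,31.190185546875",
    "117.3394775390625,27.9656982421875,121.5582275390625,30.2069091796875",
    "118.42987060546875,28.58367919921875,120.53924560546875,29.70428466796875",
    "118.95721435546875,28.81988525390625,120.01190185546875,29.38018798828125",
    "119.24560546875,28.950347900390625,119.77294921875,29.230499267578125",
    "119.3994140625,28.98914337158203,119.6630859375,29.12921905517578",
]


def extent(bbox: str) -> tuple:
    """Return (width, height) in degrees for a 'minx,miny,maxx,maxy' string."""
    min_x, min_y, max_x, max_y = (float(v) for v in bbox.split(","))
    return max_x - min_x, max_y - min_y


widths = [extent(b)[0] for b in BBOXES]
heights = [extent(b)[1] for b in BBOXES]
# Ratio of each width to the next smaller one.
ratios = [widths[i] / widths[i + 1] for i in range(len(widths) - 1)]

for w, h in zip(widths, heights):
    print(f"width {w:.6f} deg, height {h:.6f} deg")
print(ratios)
```

Each step halves the width and height, so the requested area shrinks by a factor of four per level while the output image stays at 768 x 408 pixels.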

测试内容三:服务压测

千万地图数据 1 级范围加载

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 1 级范围加载接口,模拟 10 用户并发时,发送给服务器的请求数量为 80836,平均响应时间为 39ms,本次测试中的异常为 0.02%,吞吐量(每秒完成的请求数)为 239.8/秒,每秒从服务器接收的数据量为 3656.35,每秒从服务器发送的数据量为 84.75。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

50 并发

本次采用递增模式压测,并发为 50,项目日志开启 info 状态。

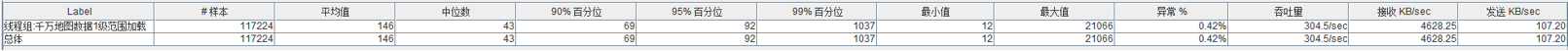

聚合报告

每秒的响应分布图(TPS)

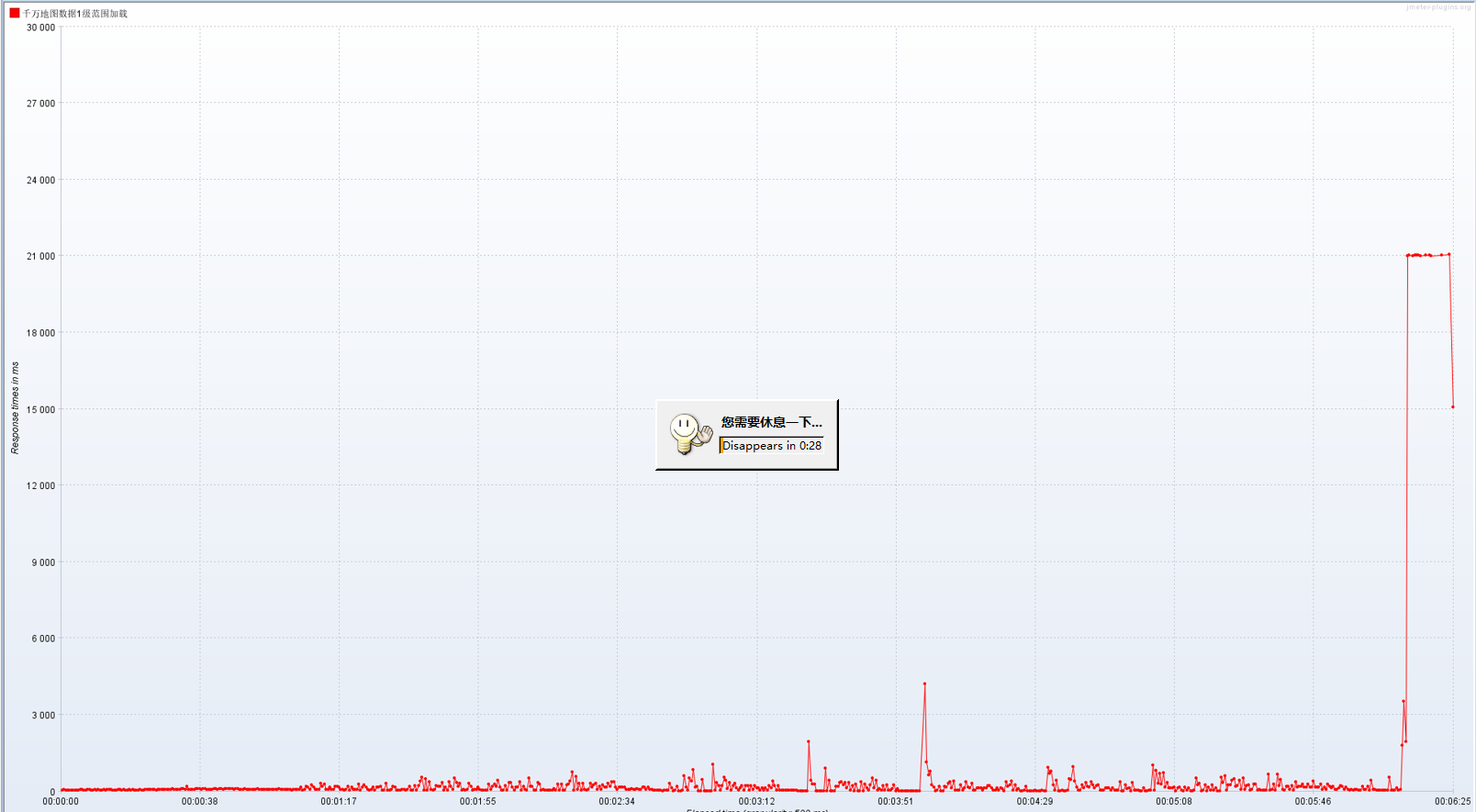

响应时间分布图(RT)

千万地图数据 1 级范围加载接口,模拟 50 用户并发时,发送给服务器的请求数量为 117224,平均响应时间为 146ms,本次测试中的异常为 0.42%,吞吐量(每秒完成的请求数)为 304.5/秒,每秒从服务器接收的数据量为 4628.25,每秒从服务器发送的数据量为 107.20。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

100 并发

本次采用递增模式压测,并发数为 100,项目日志开启 info 状态。

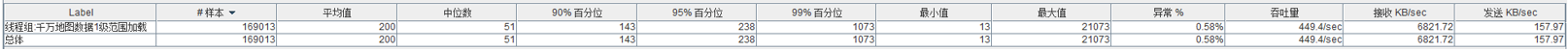

聚合报告

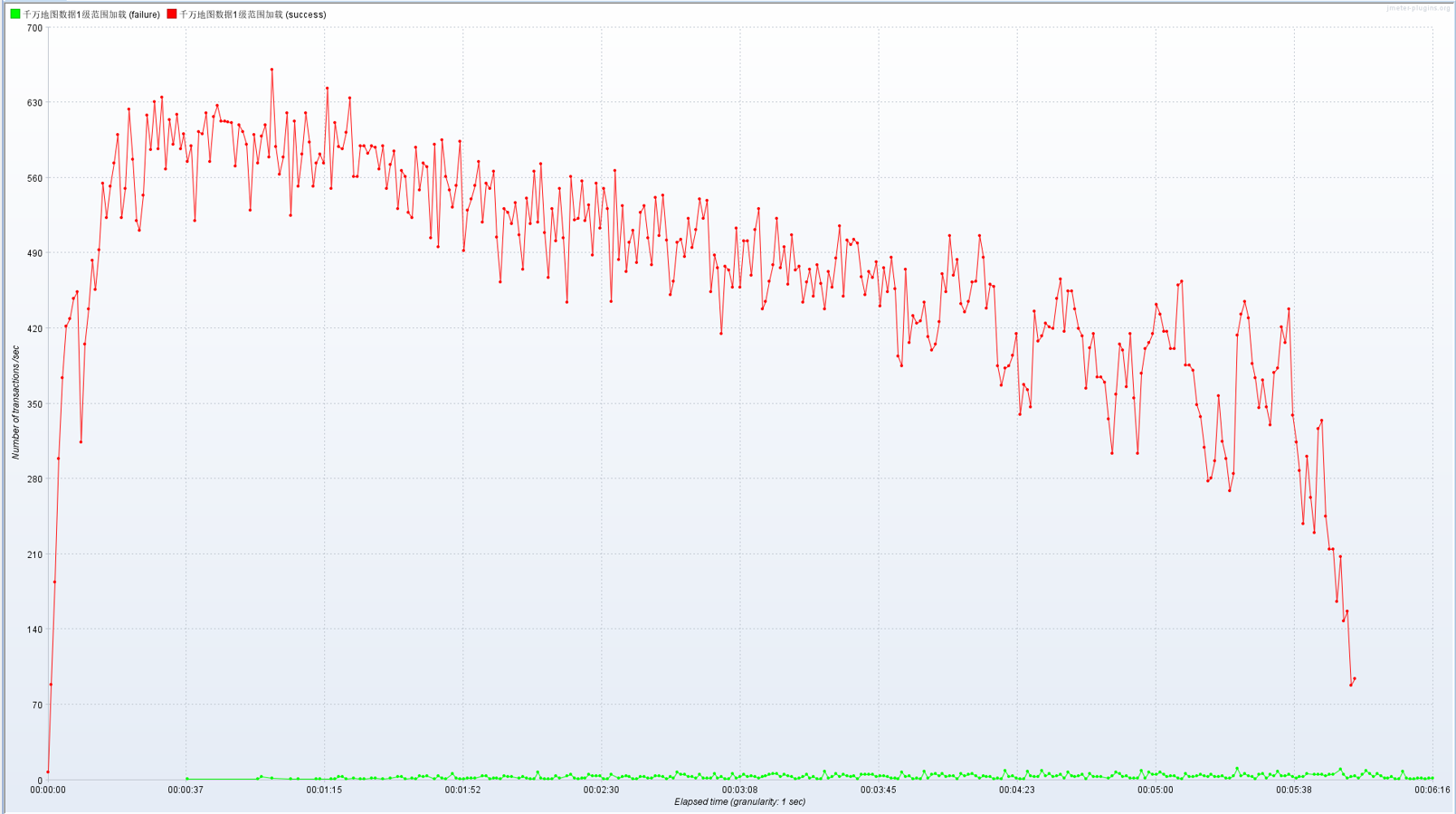

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 1 s级范围加载接口,模拟 100 用户并发时,发送给服务器的请求数量为 169013,平均响应时间为 200ms,本次测试中的异常为 0.58%,吞吐量(每秒完成的请求数)为 449.4/秒,每秒从服务器接收的数据量为 6821.72,每秒从服务器发送的数据量为 157.97。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。

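Ten-million-record map data, level 2 extent loading

10 concurrent users

This run uses ramp-up mode with 10 concurrent users; application logging is set to info level.

Aggregate report

Transactions per second (TPS) chart

Response time (RT) chart

For the level 2 extent loading endpoint with 10 simulated concurrent users, 79,676 requests were sent to the server. The average response time was 39 ms, the error rate was 0.02%, and throughput was 238.4 requests/s. Data received from the server was 3634.82 per second and data sent was 83.35 per second. With 10 concurrent users the average response time is ≤1 s, which meets the requirement.

50 concurrent users

This run uses ramp-up mode with 50 concurrent users; application logging is set to info level.

Aggregate report

Transactions per second (TPS) chart

Response time (RT) chart

For the level 2 extent loading endpoint with 50 simulated concurrent users, 121,321 requests were sent to the server. The average response time was 139 ms, the error rate was 0.35%, and throughput was 316.0 requests/s. Data received from the server was 4803.52 per second and data sent was 110.08 per second. With 50 concurrent users the average response time is ≤1 s, which meets the requirement.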

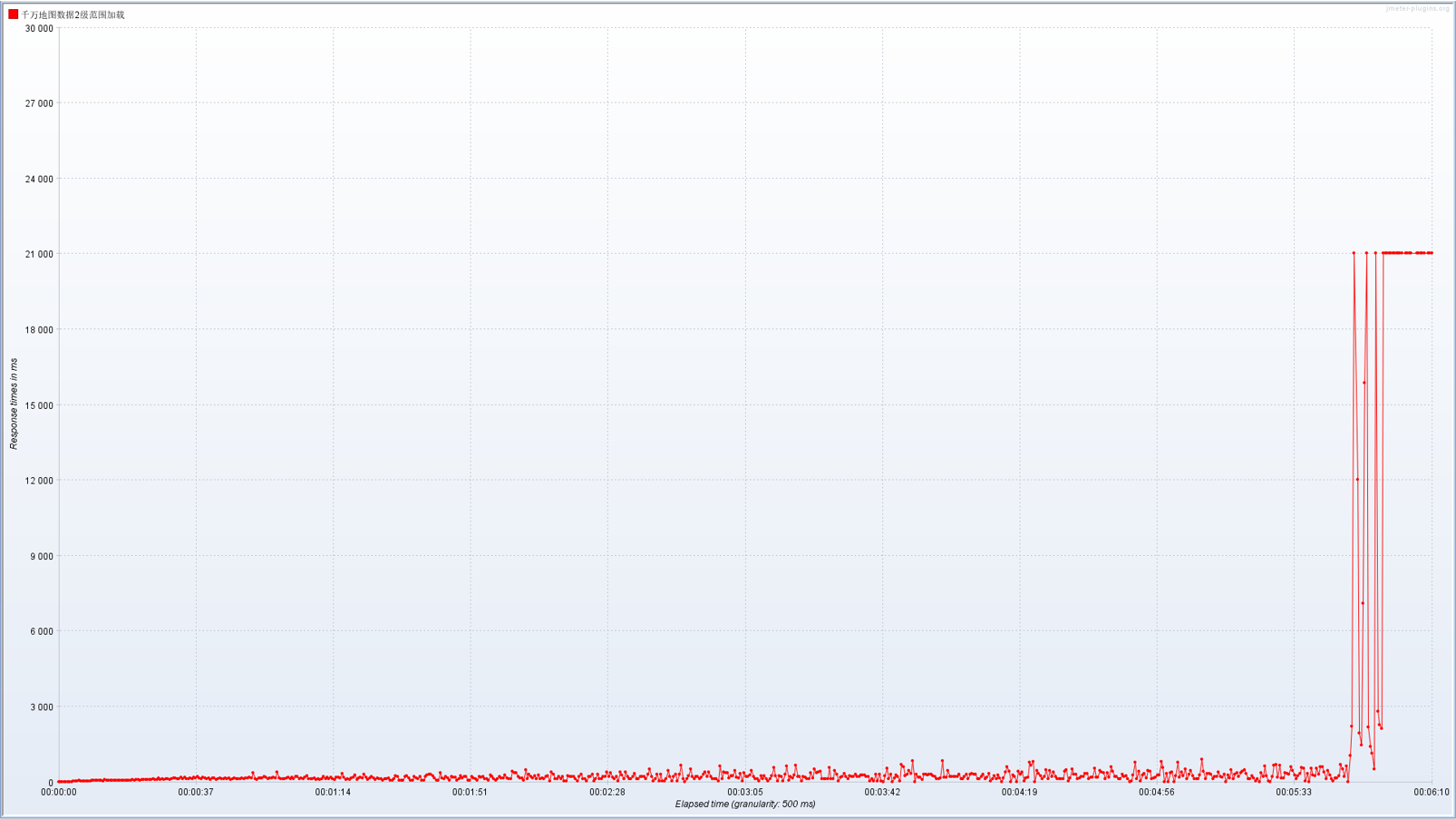

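100 concurrent users

This run uses ramp-up mode with 100 concurrent users; application logging is set to info level.

Aggregate report

Transactions per second (TPS) chart

Response time (RT) chart

For the level 2 extent loading endpoint with 100 simulated concurrent users, 154,535 requests were sent to the server. The average response time was 218 ms, the error rate was 0.72%, and throughput was 417.5 requests/s. Data received from the server was 6326.96 per second and data sent was 144.90 per second. With 100 concurrent users the average response time is ≤1 s, which meets the requirement.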

千万地图数据 3 级范围加载

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 3 级范围加载接口,模拟 10 用户并发时,发送给服务器的请求数量为 78234,平均响应时间为 40ms,本次测试中的异常为 0.01%,吞吐量(每秒完成的请求数)为 238.5/秒,每秒从服务器接收的数据量为 3637.47,每秒从服务器发送的数据量为 84.31。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

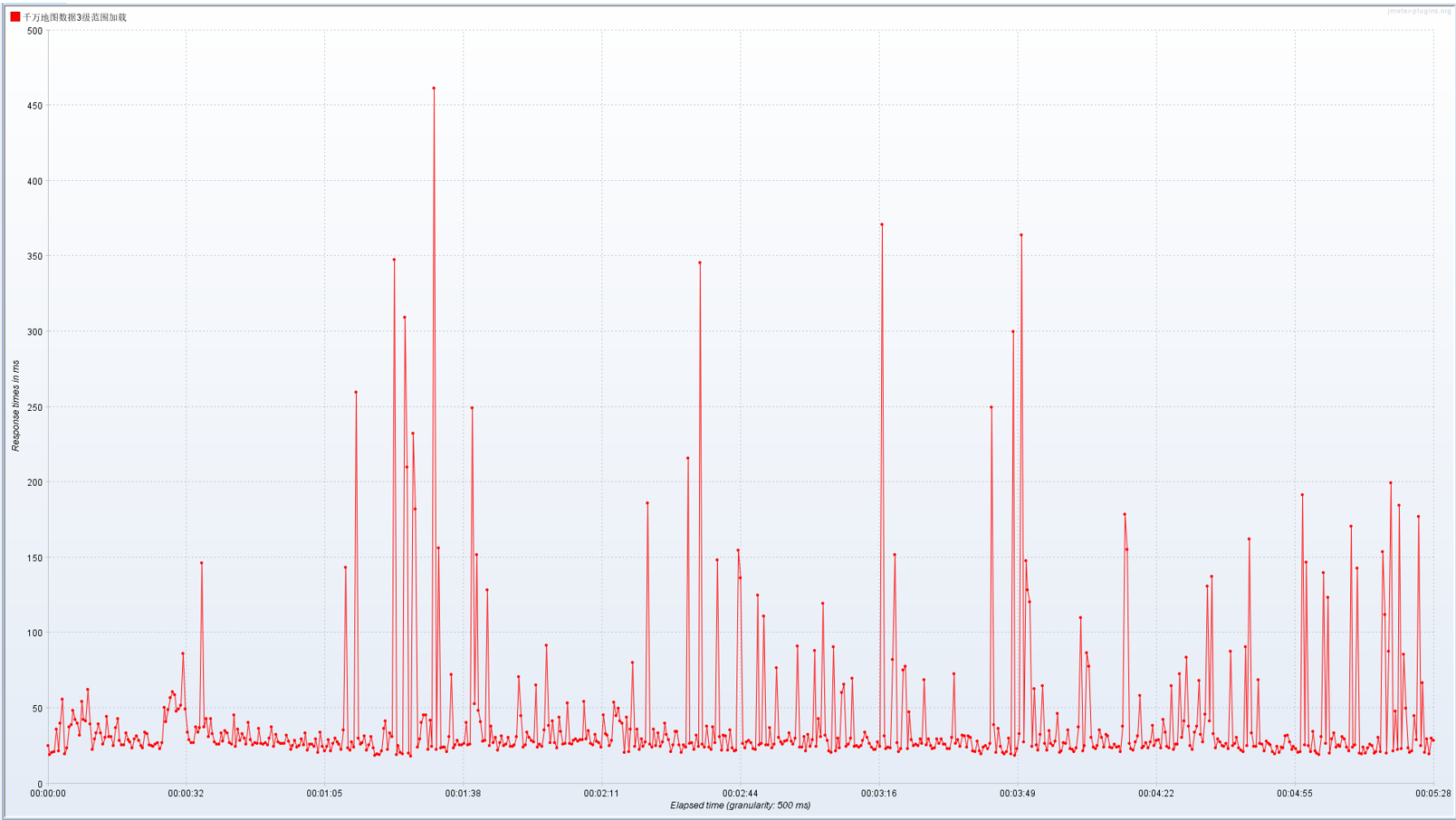

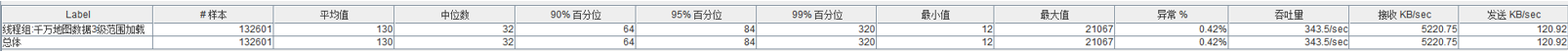

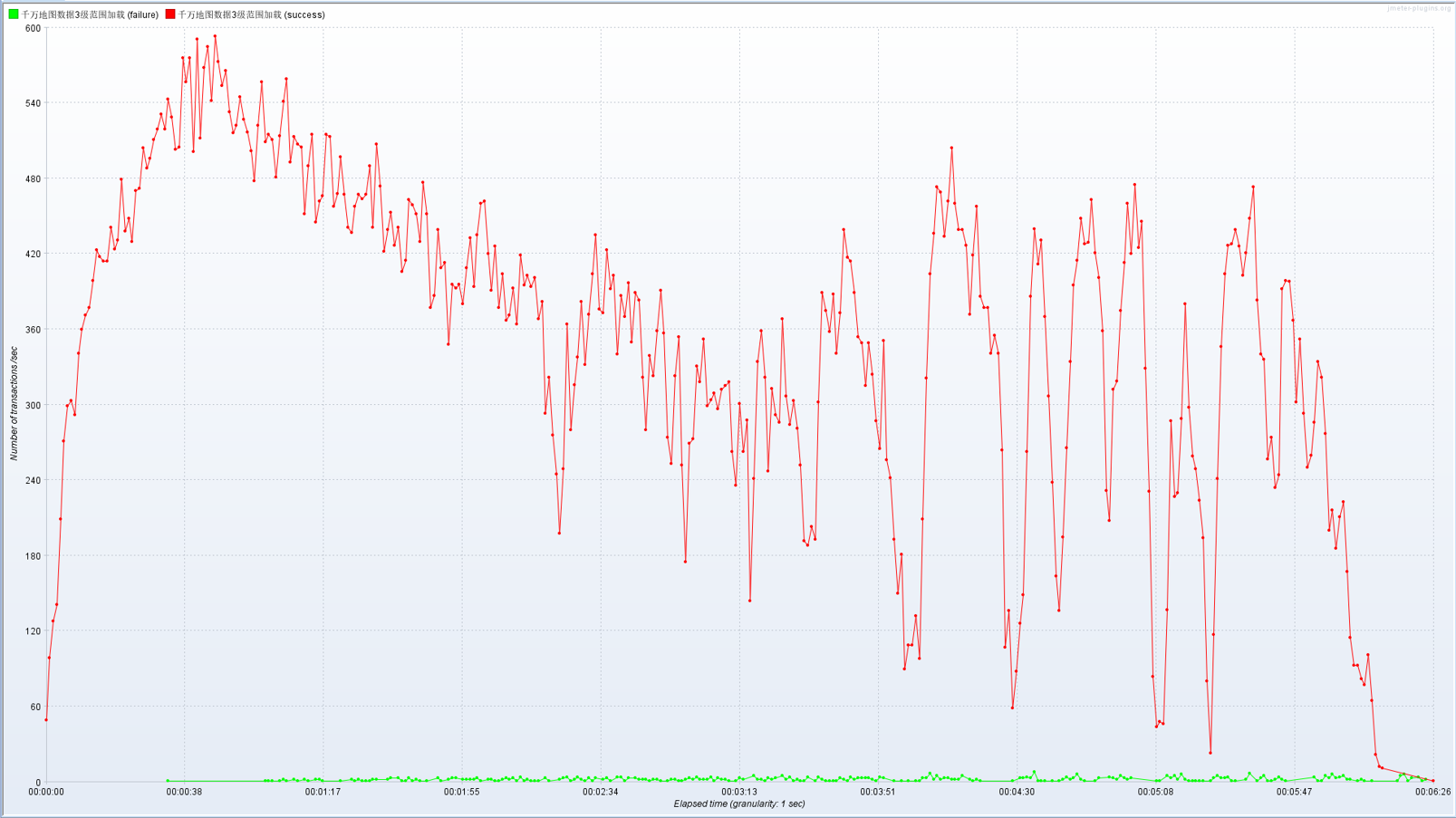

50 并发

本次采用递增模式压测,并发为 50,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 3 级范围加载接口,模拟 50 用户并发时,发送给服务器的请求数量为 132601,平均响应时间为 130ms,本次测试中的异常为 0.42%,吞吐量(每秒完成的请求数)为 343.5/秒,每秒从服务器接收的数据量为 5220.75,每秒从服务器发送的数据量为 120.92。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

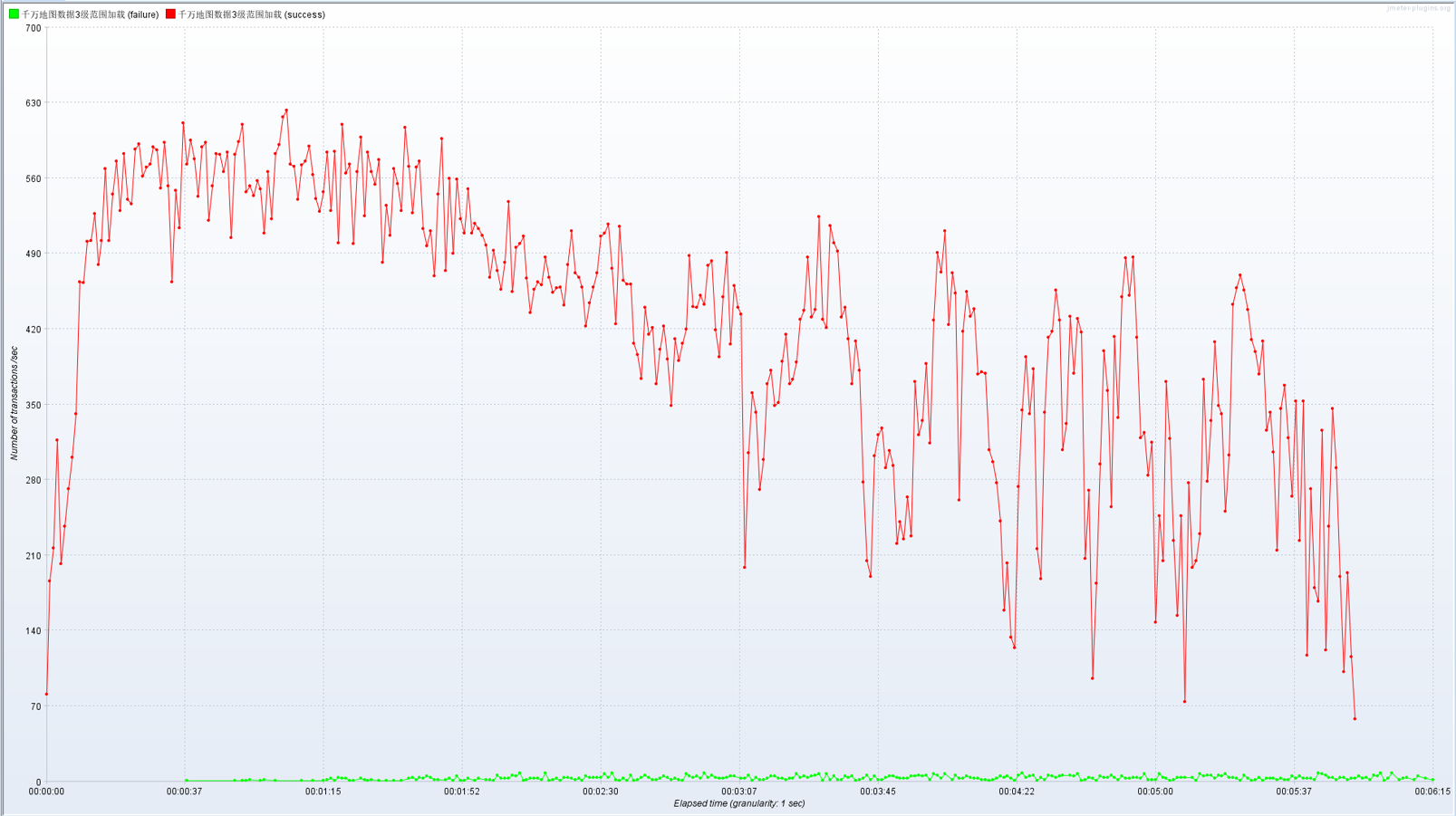

100 并发

本次采用递增模式压测,并发为 100,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 3 级范围加载接口,模拟 100 用户并发时,发送给服务器的请求数量为 150069,平均响应时间为 225ms,本次测试中的异常为 0.69%,吞吐量(每秒完成的请求数)为 399.5/秒,每秒从服务器接收的数据量为 6058.45,每秒从服务器发送的数据量为 140.26。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。

千万地图数据 4 级范围加载

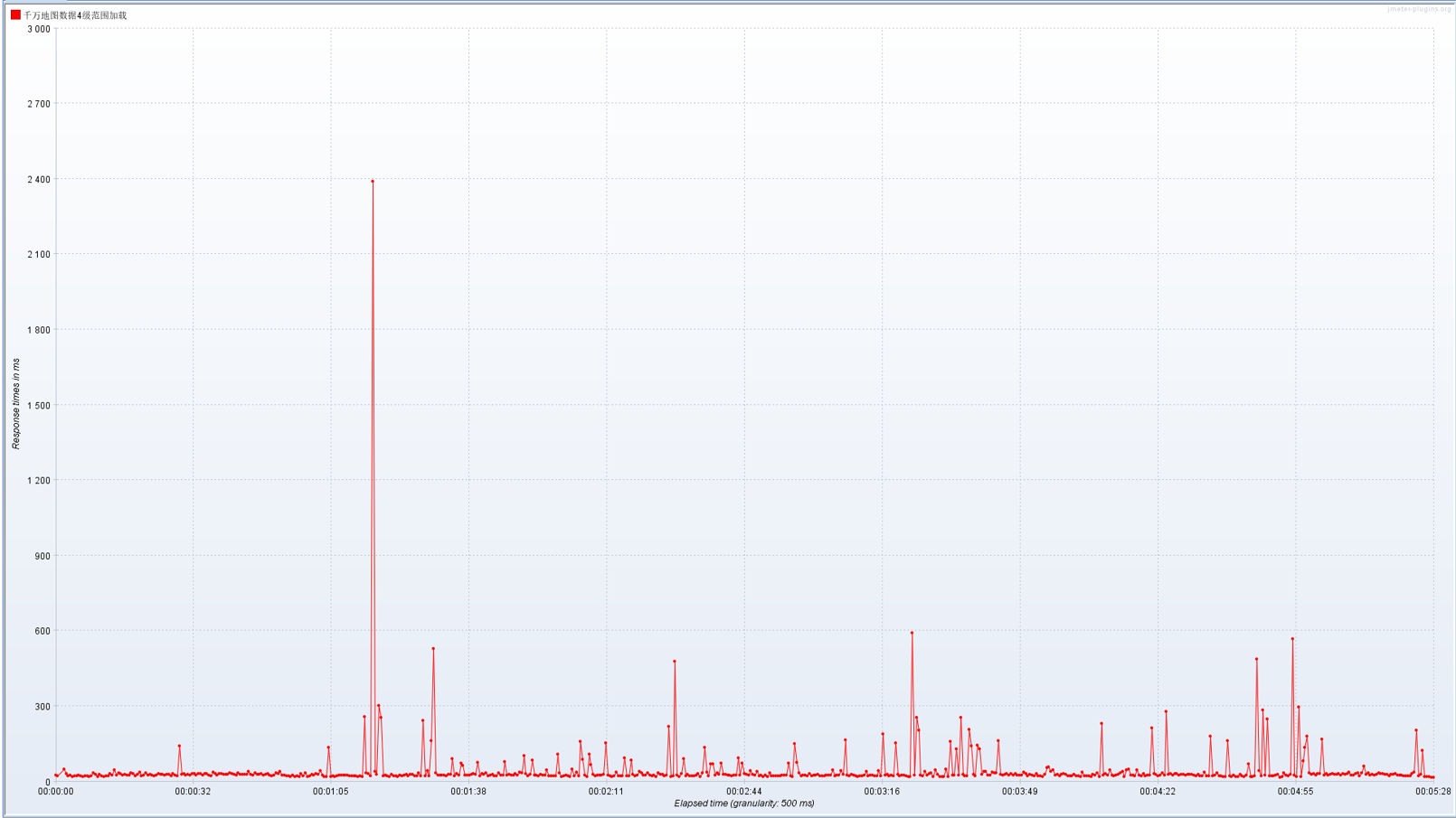

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 4 级范围加载接口,模拟 10 用户并发时,发送给服务器的请求数量为 80100,平均响应时间为 39ms,本次测试中的异常为 0.02%,吞吐量(每秒完成的请求数)为 244.2/秒,每秒从服务器接收的数据量为 3723.94,每秒从服务器发送的数据量为 86.31。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

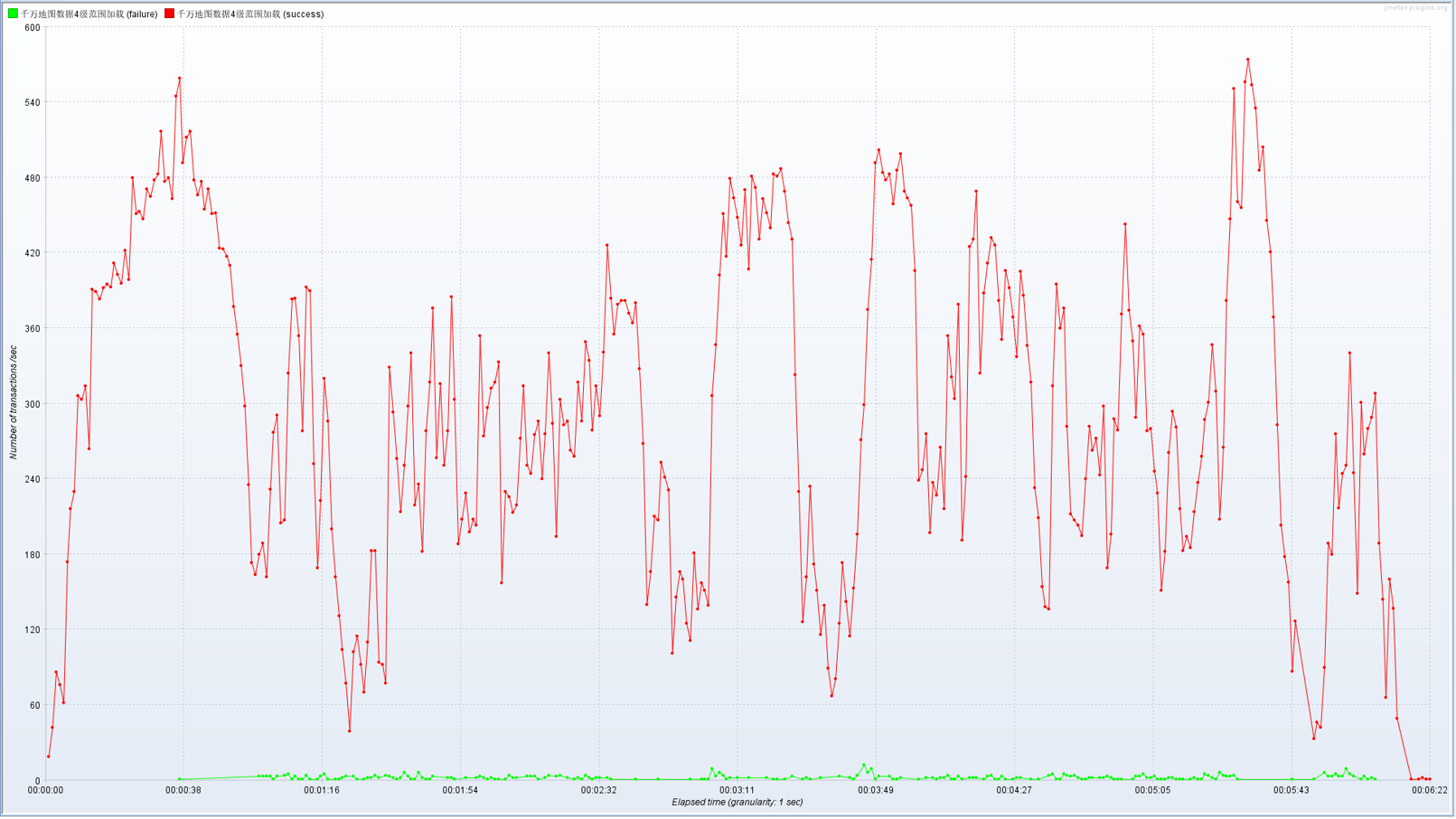

50 并发

本次采用递增模式压测,并发数为 50,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 4 级范围加载接口,模拟 50 用户并发时,发送给服务器的请求数量为 109793,平均响应时间为 154ms,本次测试中的异常为 0.45%,吞吐量(每秒完成的请求数)为 288.0/秒,每秒从服务器接收的数据量为 4376.93,每秒从服务器发送的数据量为 101.37。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

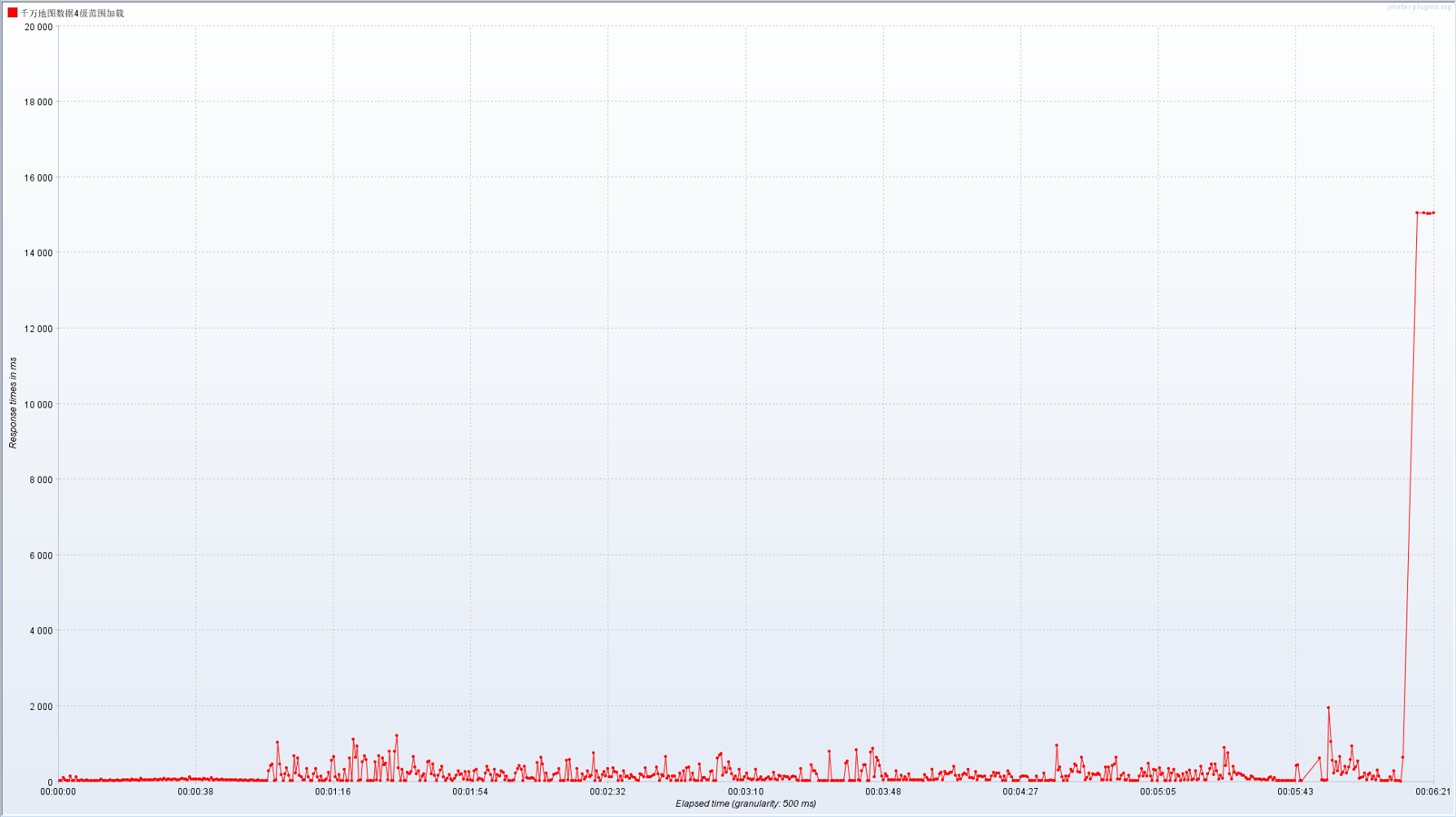

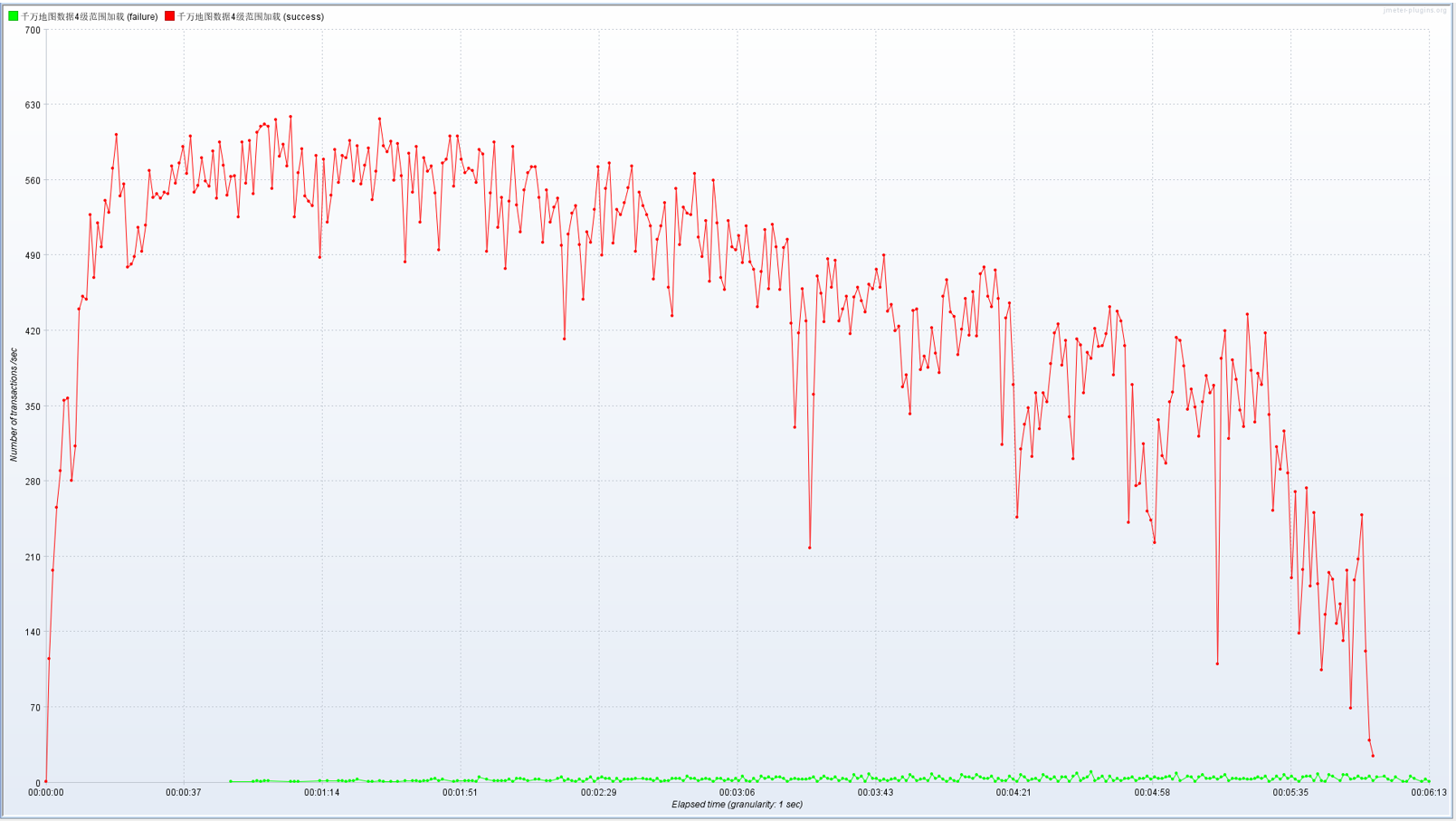

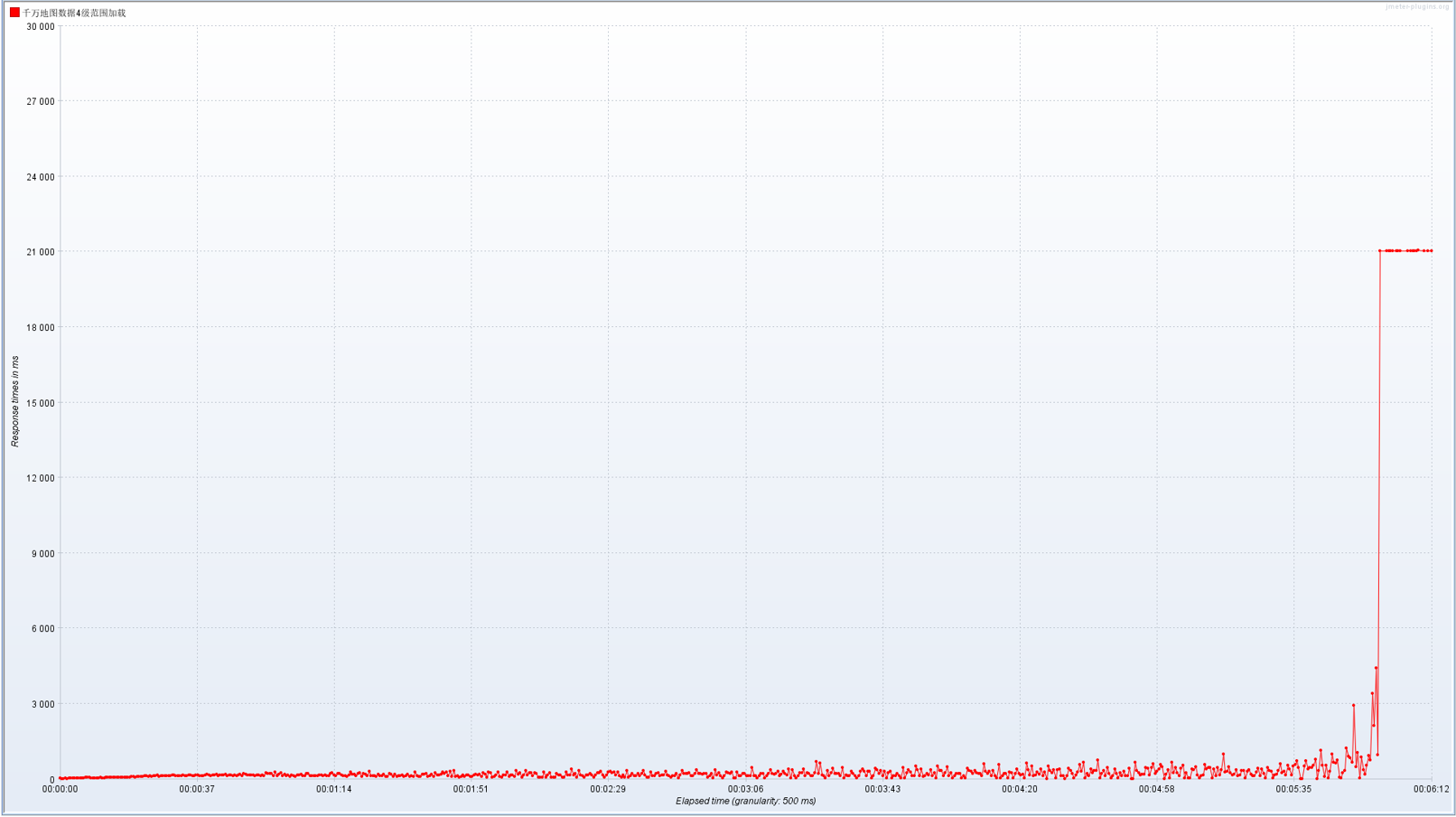

100 并发

本次采用递增模式压测,并发数为 100,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 4 级范围加载接口,模拟 100 用户并发时,发送给服务器的请求数量为 162938,平均响应时间为 206ms,本次测试中的异常为 0.61%,吞吐量(每秒完成的请求数)为 437.7/秒,每秒从服务器接收的数据量为 6641.78,每秒从服务器发送的数据量为 153.78。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。

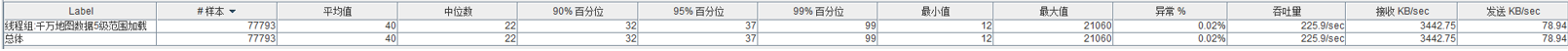

千万地图数据 5 级范围加载

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 5 级范围加载接口,模拟 10 用户并发时,发送给服务器的请求数量为 77793,平均响应时间为 40ms,本次测试中的异常为 0.02%,吞吐量(每秒完成的请求数)为 225.9/秒,每秒从服务器接收的数据量为 3442.75,每秒从服务器发送的数据量为 78.94。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

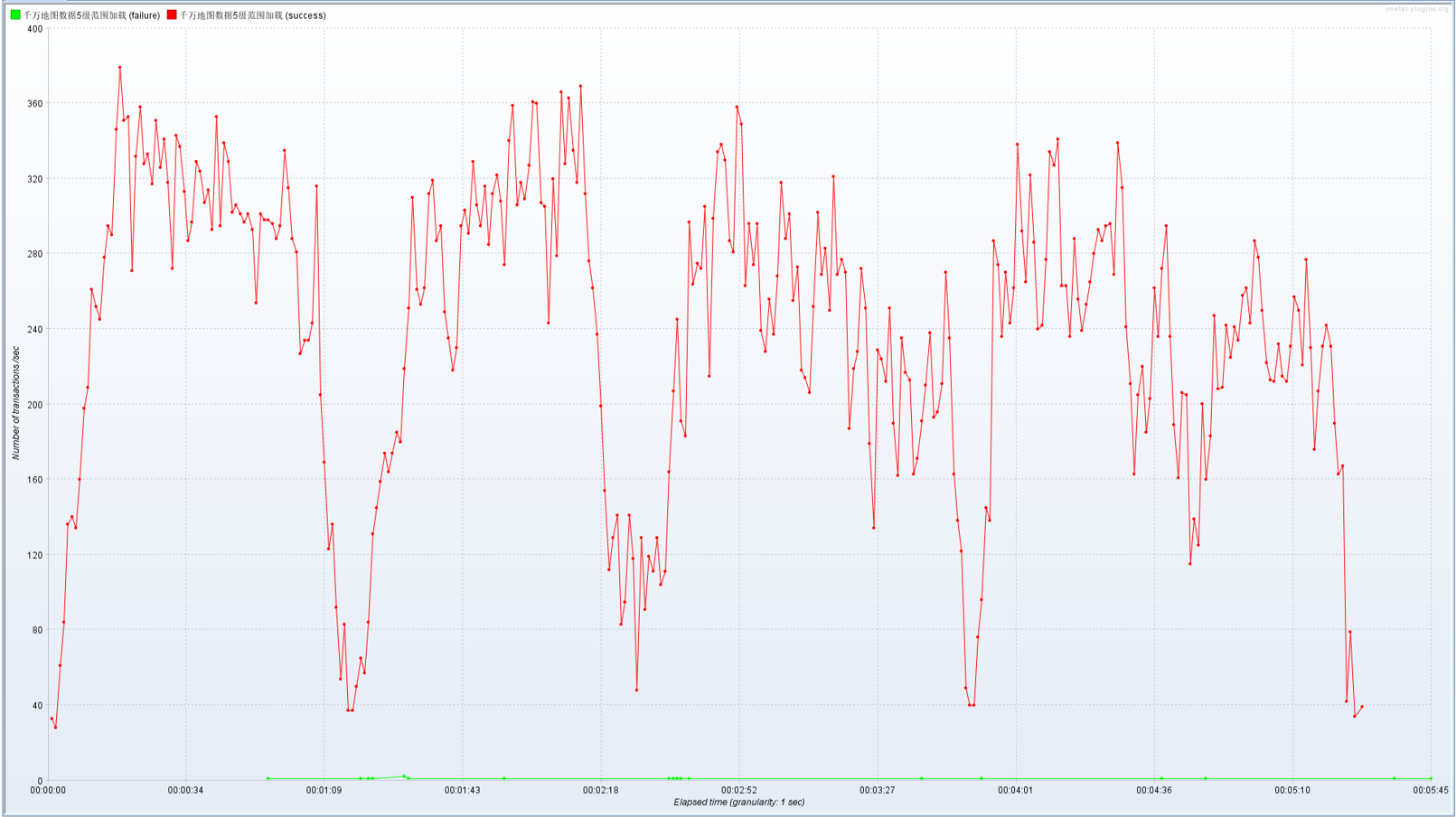

50 并发

本次采用递增模式压测,并发为 50,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 5 级范围加载接口,模拟 50 用户并发时,发送给服务器的请求数量为 127815,平均响应时间为 133ms,本次测试中的异常为 0.43%,吞吐量(每秒完成的请求数)为 330.3/秒,每秒从服务器接收的数据量为 5017.99,每秒从服务器发送的数据量为 114.98。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

100 并发

本次采用递增模式压测,并发数为 100,项目日志开启 info 状态。

聚合报告

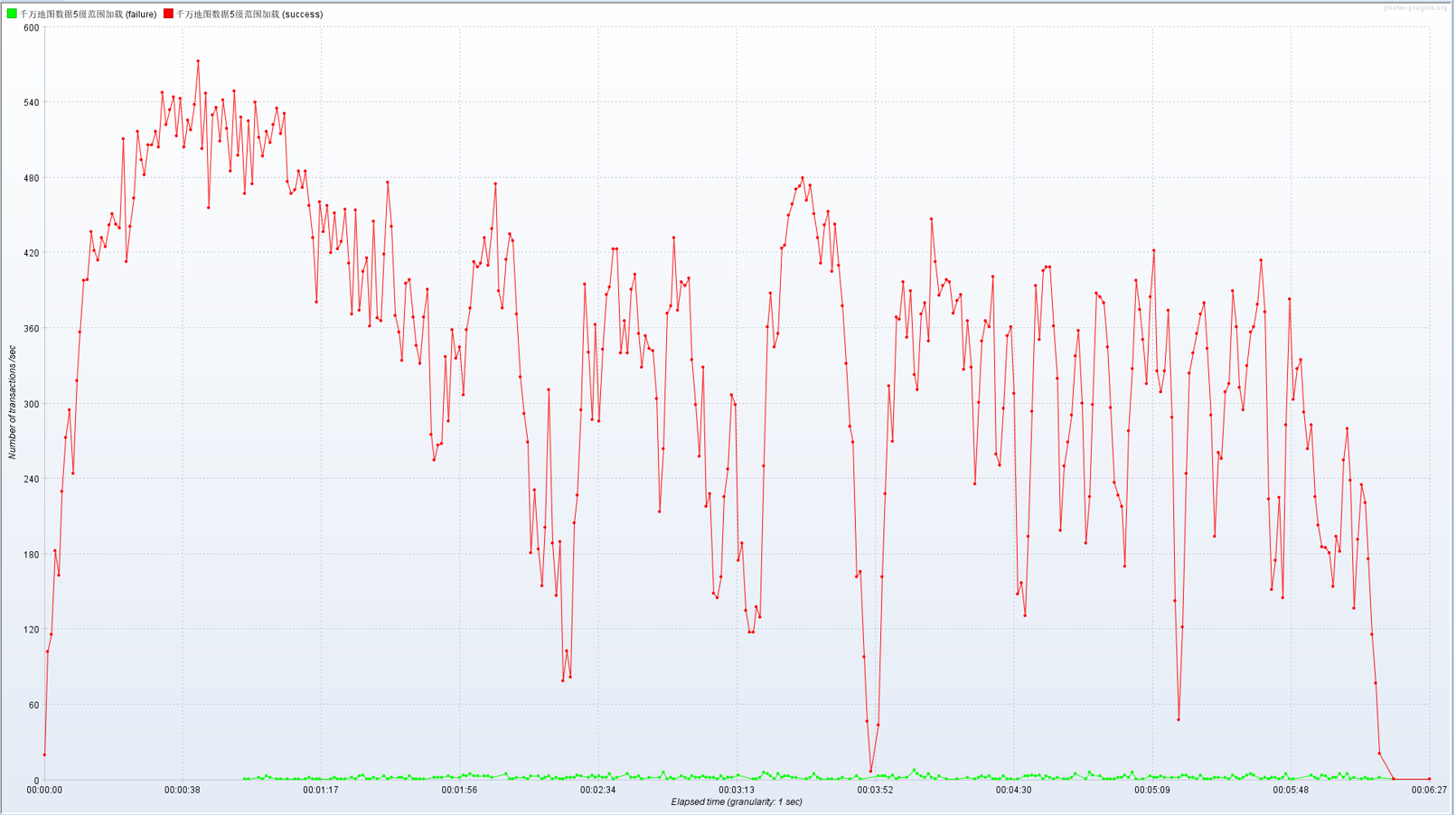

每秒的响应分布图(TPS)

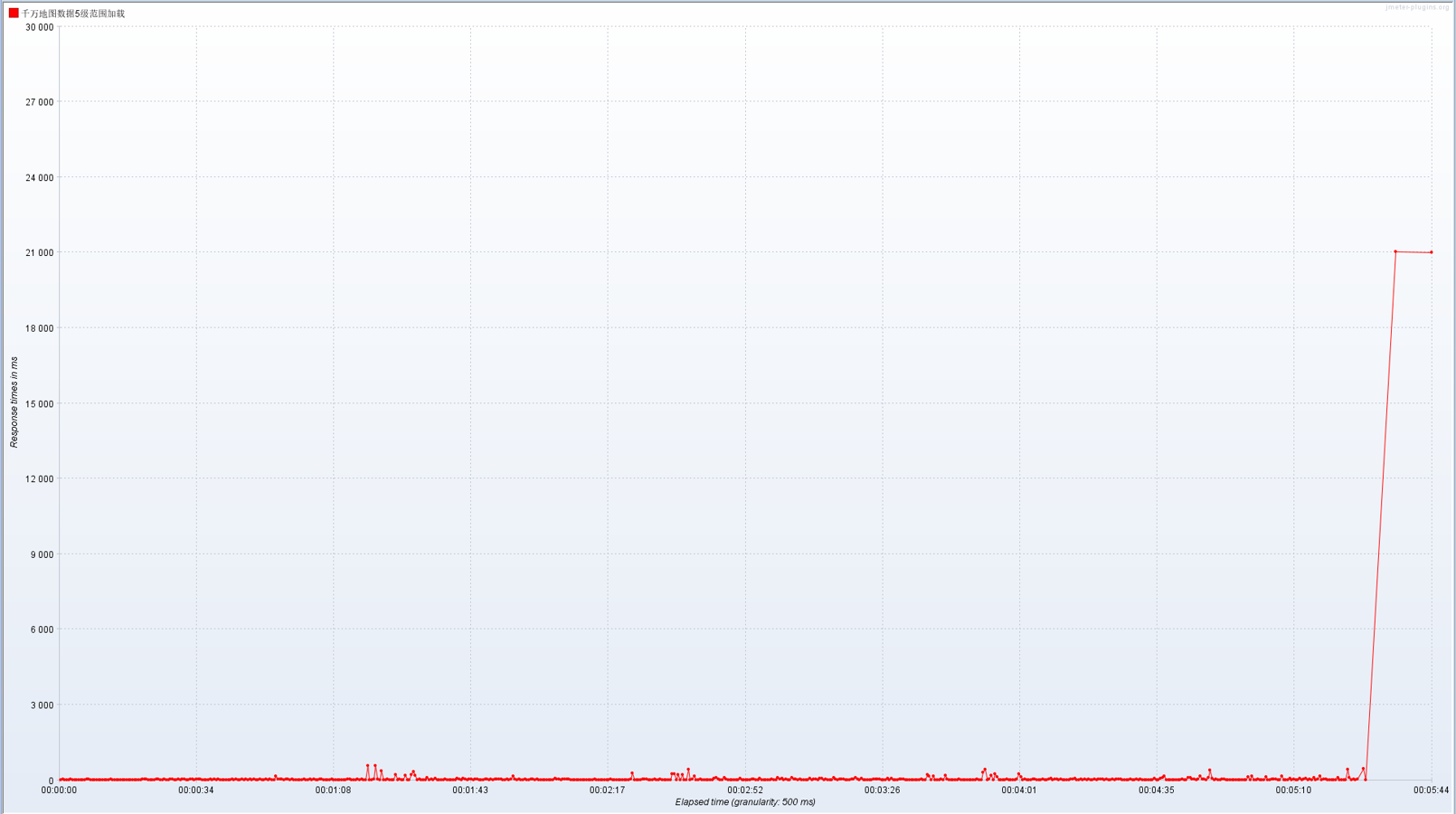

响应时间分布图(RT)

千万地图数据 5 级范围加载接口,模拟 100 用户并发时,发送给服务器的请求数量为 150934,平均响应时间为 224ms,本次测试中的异常为 0.73%,吞吐量(每秒完成的请求数)为 405.1/秒,每秒从服务器接收的数据量为 6139.06,每秒从服务器发送的数据量为 140.60。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。

千万地图数据 6 级范围加载

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

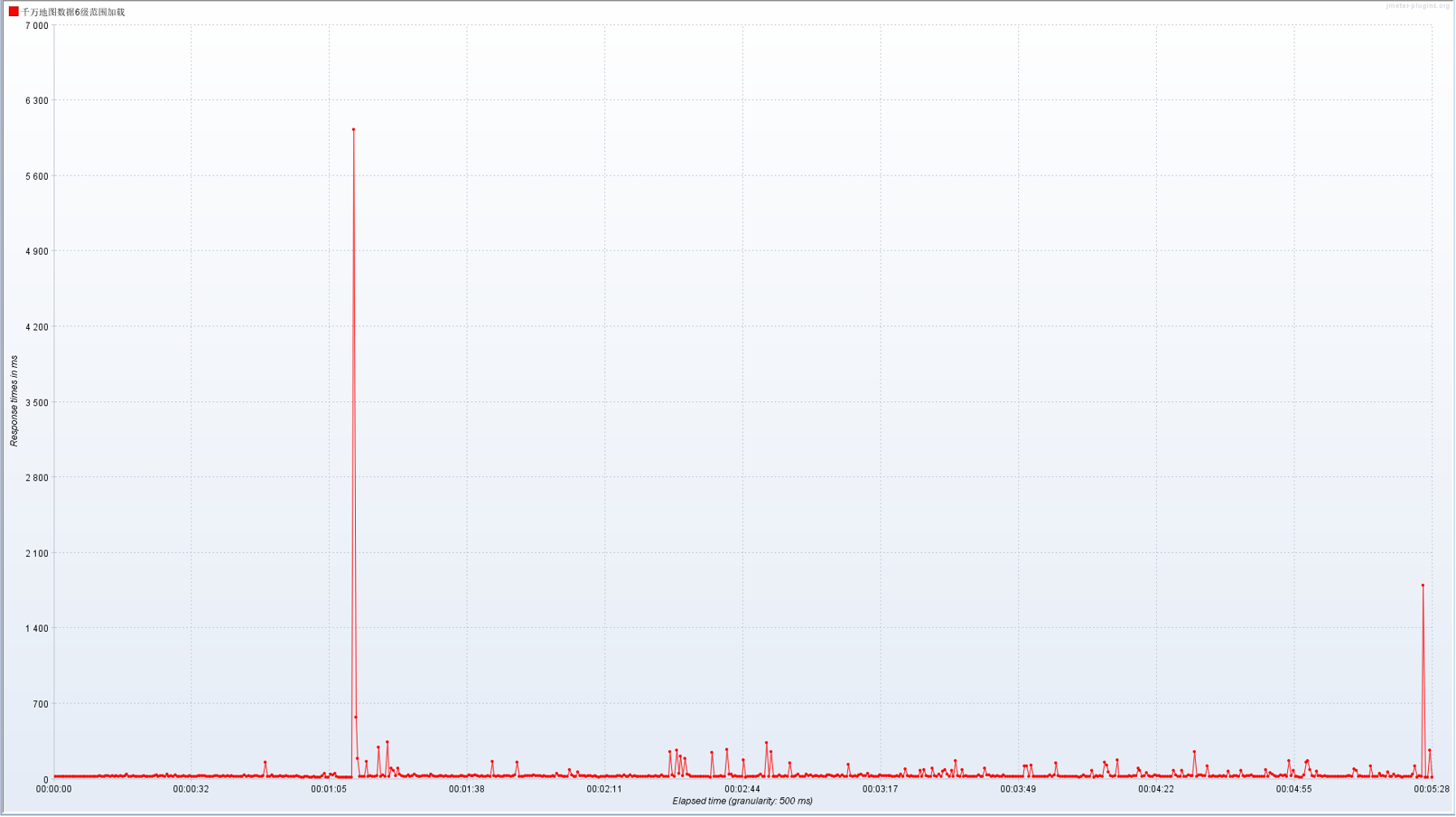

响应时间分布图(RT)

千万地图数据 6 级范围加载接口,模拟 10 用户并发时,发送给服务器的请求数量为 79885,平均响应时间为 39ms,本次测试中的异常为 0.03%,吞吐量(每秒完成的请求数)为 243.5/秒,每秒从服务器接收的数据量为 3710.99,每秒从服务器发送的数据量为 84.17。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

50 并发

本次采用递增模式压测,并发为 50,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

千万地图数据 6 级范围加载接口,模拟 50 用户并发时,发送给服务器的请求数量为 125417,平均响应时间为 136ms,本次测试中的异常为 0.42%,吞吐量(每秒完成的请求数)为 324.6/秒,每秒从服务器接收的数据量为 4929.38,每秒从服务器发送的数据量为 111.73。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

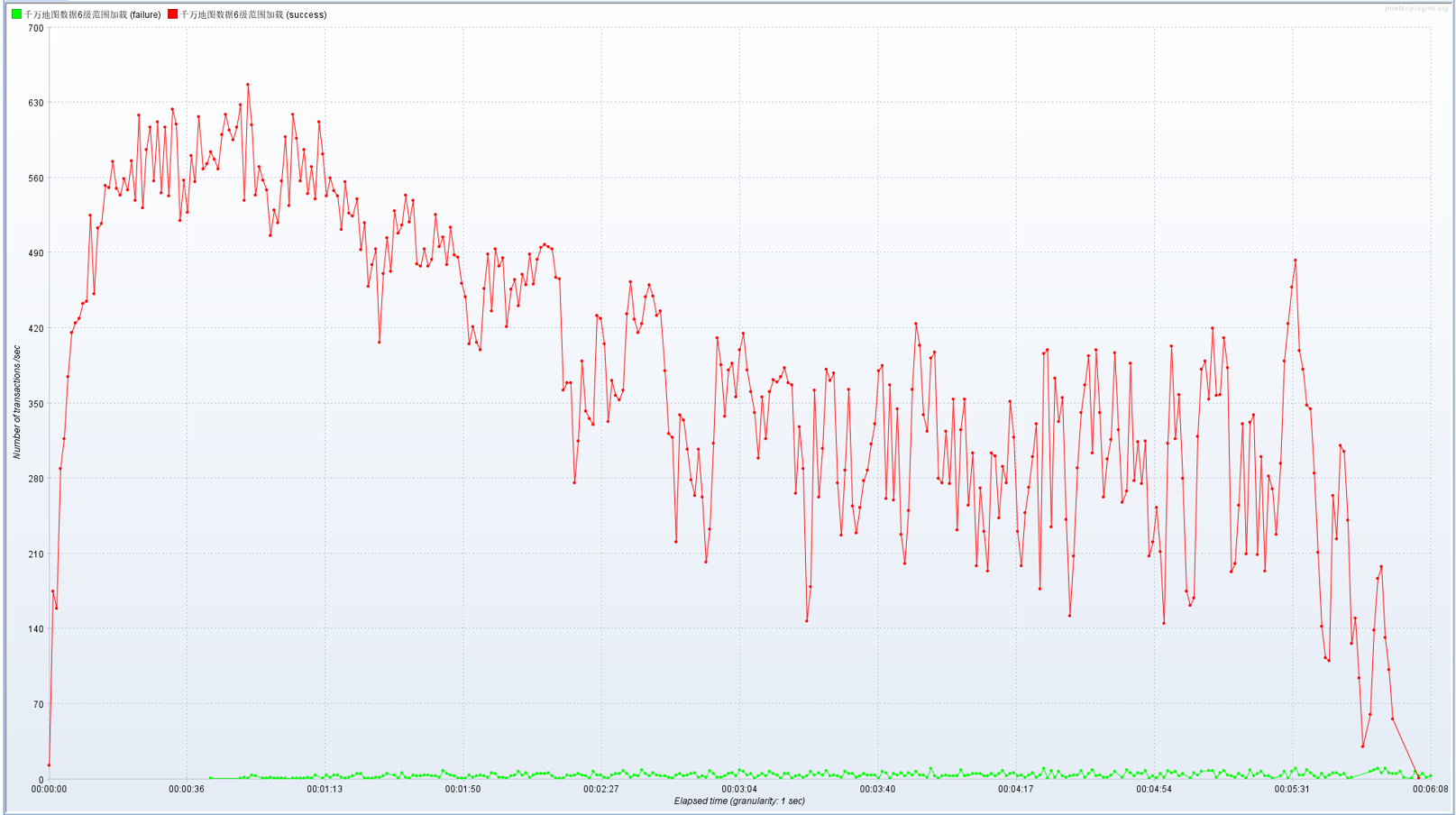

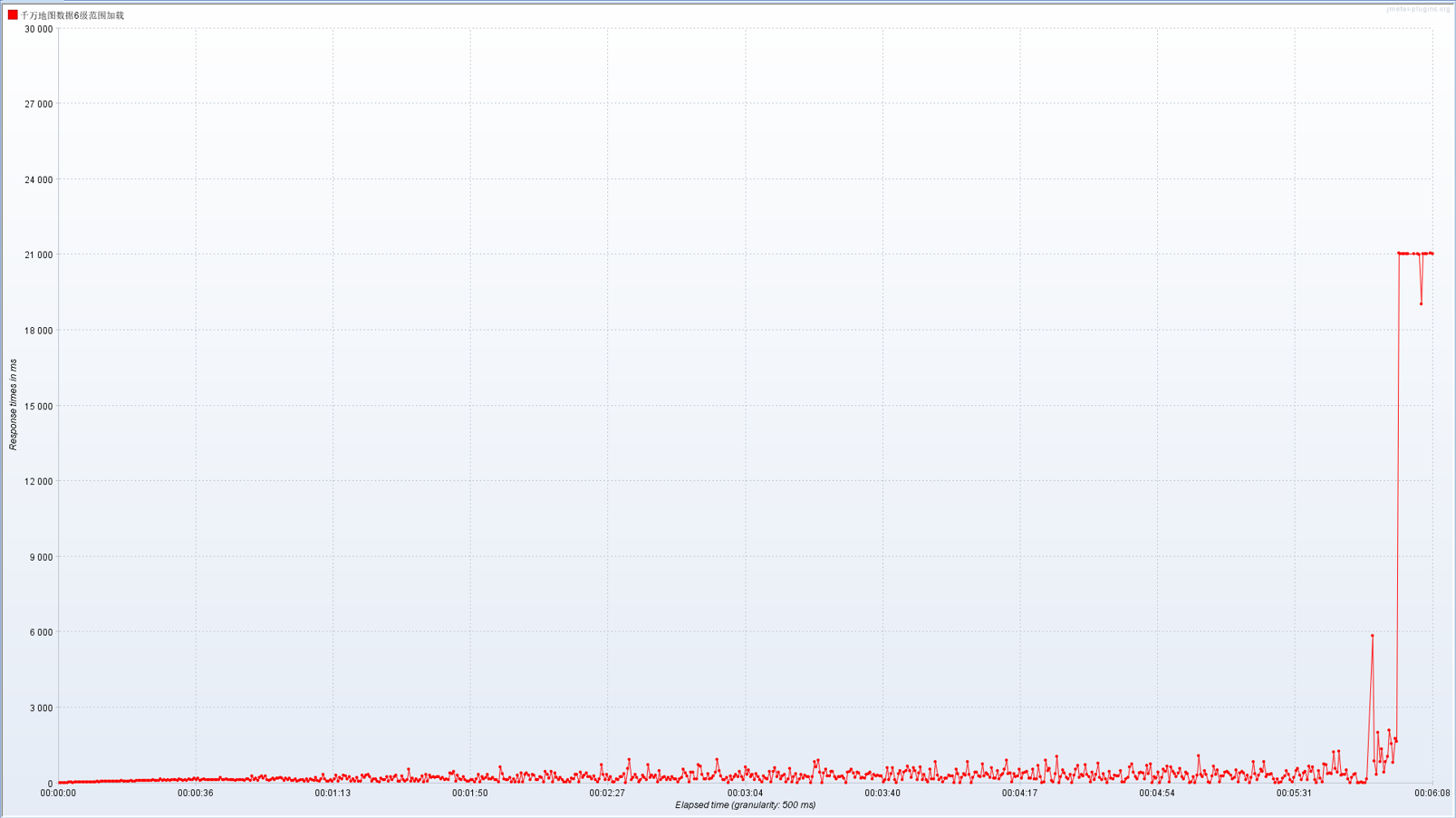

100 并发

本次采用递增模式压测,并发为 100,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

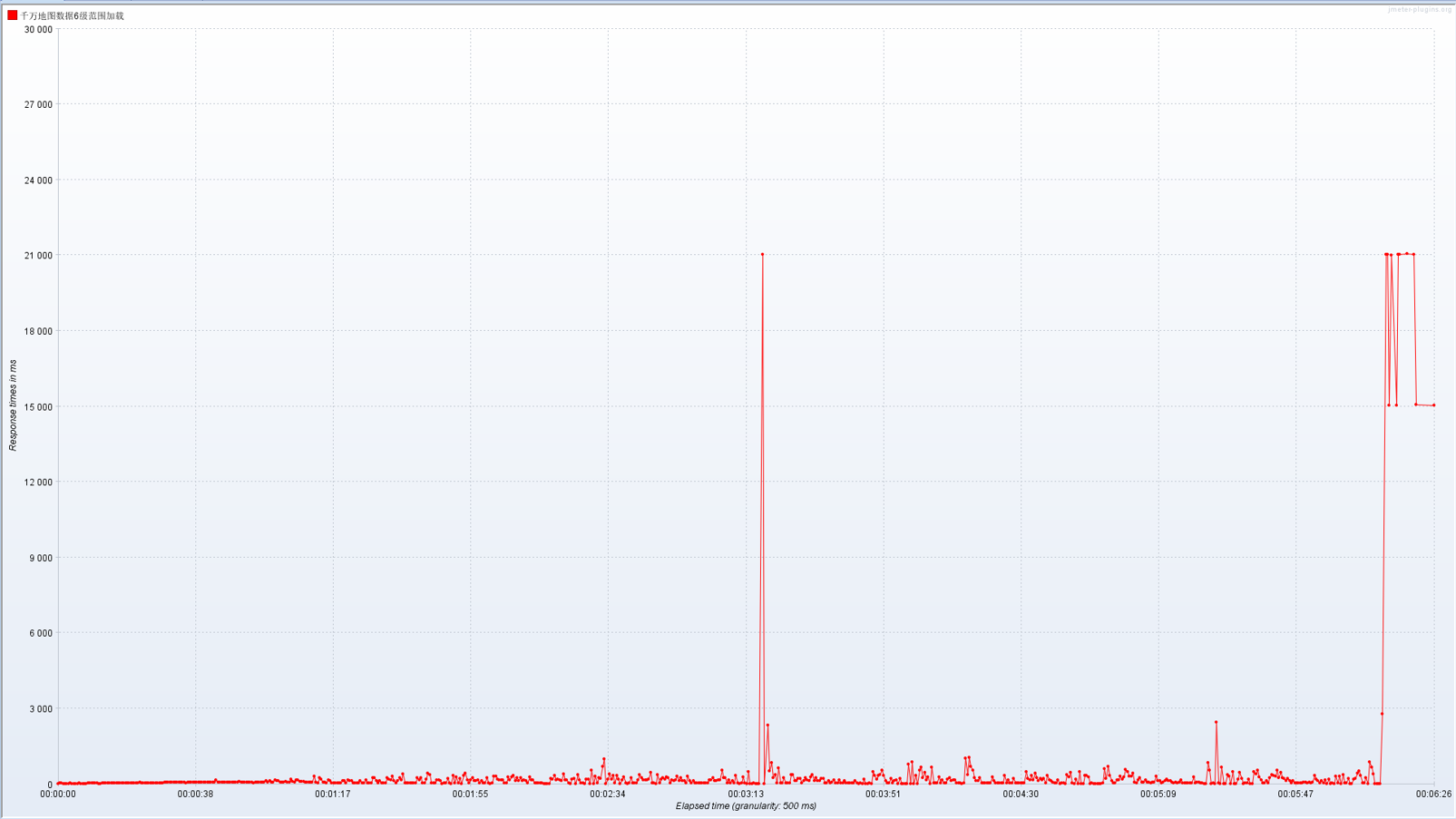

千万地图数据 6 级范围加载接口,模拟 100 用户并发时,发送给服务器的请求数量为 138678,平均响应时间为 243ms,本次测试中的异常为 0.88%,吞吐量(每秒完成的请求数)为 377.3/秒,每秒从服务器接收的数据量为 5708.22,每秒从服务器发送的数据量为 129.28。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。
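The eighteen GeoServer runs can be cross-checked with a simple closed-loop bound: if each simulated user waits for a reply before sending the next request, throughput cannot exceed users divided by average response time. The sketch below applies that bound to the figures reported above and collects the highest error rate per concurrency level. The helper name `closed_loop_bound` is illustrative; this is a sanity check under that closed-loop assumption, not part of the original test tooling.

```python
# (extent level, concurrent users, average response time in ms,
#  reported throughput in requests/s, error rate in %), copied from the report.
RUNS = [
    (1, 10, 39, 239.8, 0.02), (1, 50, 146, 304.5, 0.42), (1, 100, 200, 449.4, 0.58),
    (2, 10, 39, 238.4, 0.02), (2, 50, 139, 316.0, 0.35), (2, 100, 218, 417.5, 0.72),
    (3, 10, 40, 238.5, 0.01), (3, 50, 130, 343.5, 0.42), (3, 100, 225, 399.5, 0.69),
    (4, 10, 39, 244.2, 0.02), (4, 50, 154, 288.0, 0.45), (4, 100, 206, 437.7, 0.61),
    (5, 10, 40, 225.9, 0.02), (5, 50, 133, 330.3, 0.43), (5, 100, 224, 405.1, 0.73),
    (6, 10, 39, 243.5, 0.03), (6, 50, 136, 324.6, 0.42), (6, 100, 243, 377.3, 0.88),
]


def closed_loop_bound(users: int, avg_ms: float) -> float:
    """Throughput ceiling when every user waits for a reply before re-sending."""
    return users / (avg_ms / 1000.0)


max_error = {}  # highest error rate seen at each concurrency level
for level, users, avg_ms, tps, err in RUNS:
    bound = closed_loop_bound(users, avg_ms)
    max_error[users] = max(max_error.get(users, 0.0), err)
    print(f"level {level}, {users:>3} users: reported {tps:6.1f}/s, bound {bound:6.1f}/s")

print(max_error)
```

Every reported throughput sits below its bound, as expected, and the error rate grows with concurrency, reaching 0.88% at 100 users.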

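Compatibility testing of domestic databases and their GIS extensions

The following tests were run under the same network conditions and with the same test data, with the two databases isolated from each other. All times are averages over six runs.

| Test item | Highgo | Kingbase |
|---|---|---|
| Data import | 14.83 s | 19.30 s |
| SQL insert | 45.303 s | 47.597 s |
| SQL update (id up to 5,000,000) | 27.652 s | Server connection was interrupted on the first attempt; 32 s on the second |
| SQL update (id greater than 5,000,000) | 36.821 s | 38.176 s |
| SQL query | 32.371 s | 39.611 s |
| SQL delete | 1.292 s | 15.231 s |
| Ease of installation and documentation | Average documentation; RPM install; connection failures occurred after installation | Detailed documentation; ISO-based installer with selectable configuration |
| Graphical installer | Supported | Supported |
| Supported platforms and adoption | Compatibility has been completed with domestic ecosystem partners, including server vendors, CPU vendors, operating system vendors and middleware vendors. | Supports dozens of operating system releases, including Linux, Windows and domestic Kylin, on general x86_64 as well as domestic Loongson, Phytium and Sunway CPU architectures. |
| Community | Established | Mature |
| PostGIS | Installed by replacement | Installed by replacement |
| Use on Windows | The bundled tool does not display geometry data; Navicat and QGIS display it correctly; PostGIS extension available | No PostGIS extension |
| QGIS | Data can be imported and used directly | Data can be imported only after modifying the program |
| ST_Intersects | 2.233 s | 3.825 s |
| ST_AsGeoJSON | 6.1 s | 6.3 s |
| st_simplify | 2.7 s | 2.7 s |
| st_removerepeatedpoints | 6.1 s | 6.1 s |
| ST_Area | 0.381 s | 0.385 s |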

Data test method

-- Insert data

create table tbl_test (id int, info text, c_time timestamp);

insert into tbl_test select generate_series(1,10000000),md5(random()::text),clock_timestamp();

-- Update data

UPDATE tbl_test SET info='1' where id <=5000000;

UPDATE tbl_test SET info='1' where id >5000000;

-- Query data

SELECT id,info,c_time from tbl_test WHERE id <=5000000;

-- Test data: 1.25 million land parcel (宗地) records

-- PostGIS function tests

-- Intersection

select t1.id,t1.t_zd_zd_zl,t2.id,t2.ghdy from bb t1,bmh_copy1 t2 where ST_Intersects(t1.geom ,t2.geom);

-- Geometry to GeoJSON

select ST_AsGeoJSON(geom) from bb;

-- Simplification

select st_simplify(geom, 0.00003) from bb;

-- Remove repeated points

select st_removerepeatedpoints(geom) from bb;

-- Limit the number of coordinate decimal places

select ST_AsGeoJSON(geom, 6) from bb;

-- Area measurement

select ST_Area(geom) from bb;

Related resource: China Database Ranking - Modb (墨天轮)
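The timings in the comparison table are averages over six runs. A small harness of the following shape could collect them. This is a sketch only: `average_runtime` and `fake_query` are hypothetical names, and the stand-in workload replaces a real database call, which would go through whichever driver the database under test supports.

```python
import time
from statistics import mean


def average_runtime(run_query, repeats=6):
    """Call run_query `repeats` times; return (mean seconds, list of durations)."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        durations.append(time.perf_counter() - start)
    return mean(durations), durations


def fake_query():
    """Stand-in workload; replace with code that executes one SQL statement."""
    sum(range(100_000))


avg, runs = average_runtime(fake_query)
print(f"average over {len(runs)} runs: {avg:.4f} s")
```

Keeping the individual durations alongside the mean makes outliers visible, such as the interrupted first update on Kingbase.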

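Database stress testing

Tool description

pgbench is the benchmarking tool shipped with PostgreSQL. It simulates a database workload by running transactions and queries, and is mainly used to measure throughput, latency and concurrent performance.

Main functions and uses of pgbench:

- Performance testing: pgbench simulates multiple concurrent users running database transactions that include inserts, updates, deletes and queries. The number of concurrent users, the test duration and the transaction script can all be adjusted.

- Workload simulation: with custom transaction scripts, pgbench can simulate different kinds of workload, including read-heavy, write-heavy and mixed, so that performance can be evaluated under each.

- Throughput and latency measurement: pgbench reports transactions per second (TPS) and average transaction latency, and with -r the average latency of each statement.

- Concurrency testing: the number of concurrent clients is configurable, which helps determine how the database behaves when many clients access it at once.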

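Main initialization parameters:

- -F: fill factor; the four tables are created with the given fill factor. Default is 100.

- -s: scale factor; multiplies the number of generated rows by the given value. With the default scale factor of 1, pgbench_accounts has 100,000 rows; with -s 100 it has 10,000,000 rows.

- --index-tablespace: create indexes in the given tablespace rather than the default one.

- --tablespace: create tables in the given tablespace rather than the default one.

- --unlogged-tables: create all tables as unlogged tables rather than permanent tables.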
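The effect of the scale factor can be worked out directly from the base row counts of the standard pgbench tables. The sketch below uses the stock PostgreSQL table names; the kbbench runs later in this report show the same tables under a `kbbench_` prefix. The helper name `rows_for_scale` is illustrative.

```python
# Rows created per table at scale factor 1 (pgbench_history starts empty).
BASE_ROWS = {
    "pgbench_branches": 1,
    "pgbench_tellers": 10,
    "pgbench_accounts": 100_000,
}


def rows_for_scale(scale: int) -> dict:
    """Return the number of rows pgbench creates in each table for a scale factor."""
    return {table: base * scale for table, base in BASE_ROWS.items()}


for scale in (1, 100):
    print(scale, rows_for_scale(scale))
```

At scale factor 1 there is a single branch row and ten teller rows, so every concurrent client updates the same few rows. The PostgreSQL documentation recommends a scale factor at least as large as the number of clients for the default TPC-B-like test, which is worth keeping in mind when reading results obtained at scale factor 1.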

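Benchmark run

Parameters

- -c: number of simulated clients, i.e. concurrent database sessions. Default is 1.

- -C: establish a new connection for each transaction rather than one per client session. Useful for measuring connection overhead.

- -D: pass a VARNAME=VALUE variable to a custom script; may be given multiple times.

- -f: name of a custom script file.

- -j: number of worker threads within pgbench. Using more than one thread can help on multi-CPU machines. Clients are distributed as evenly as possible across the threads. Default is 1.

- -l: write information about each transaction to a log file.

- -M: protocol used to submit queries to the server: simple uses the simple query protocol, extended uses the extended query protocol, and prepared uses the extended query protocol with prepared statements.

- -n: do not run VACUUM before the test. VACUUM is a PostgreSQL maintenance task that cleans up and reorganizes table data to improve performance.

- -N: skip the updates to pgbench_tellers and pgbench_branches that the default script performs. This avoids update contention on these two small tables, at the cost of making the workload less like TPC-B.

- -r: after the benchmark finishes, report the average per-statement latency (execution time as seen by the client) of each command.

- -S: run the built-in select-only script. Shorthand for -b select-only.

- -t: number of transactions each client runs. Default is 10.

- -T: run the test for this many seconds rather than a fixed number of transactions per client. -t and -T are mutually exclusive.

- -v: vacuum all four standard tables before running the test. With neither -n nor -v, pgbench vacuums only pgbench_tellers and pgbench_branches and truncates pgbench_history.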

Report fields

- transaction type: TPC-B (sort of): the transaction type; the built-in workload resembling the TPC-B benchmark was used.

- scaling factor: 1: the scale factor of the test data set; 1 means the default size with no scaling.

- query mode: extended: queries were submitted with the extended query protocol (the -M setting).

- number of clients: 10: the number of simulated concurrent clients connected to the database.

- number of threads: 2: the total number of pgbench worker threads (the -j setting); the clients are spread across these threads.

- number of transactions per client: 100: the number of transactions each client runs.

- number of transactions actually processed: 1000/1000: 1000 transactions completed, matching the 1000 expected.

- tps = 45.268234 (including connections establishing): average transactions per second with connection setup time included, about 45.27 here.

- tps = 45.289643 (excluding connections establishing): average transactions per second with connection setup time excluded, about 45.29 here.

- statement latencies in milliseconds: the average latency of each statement in the script, in milliseconds.
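The summary fields described above are easy to extract for side-by-side comparison. The sketch below parses a pgbench-style summary with regular expressions; the sample text is copied from the Kingbase 20-client run in this report, and `parse_pgbench` is a hypothetical helper name. It also checks that the reported average latency is close to clients divided by TPS, which holds for that run.

```python
import re

# Summary lines copied from the Kingbase 20-client run in this report.
SAMPLE = """\
number of clients: 20
number of threads: 10
duration: 600 s
number of transactions actually processed: 301063
latency average = 39.865 ms
tps = 501.698395 (including connections establishing)
tps = 501.726618 (excluding connections establishing)
"""


def parse_pgbench(text: str) -> dict:
    """Extract clients, transactions, average latency and TPS from a summary."""
    def grab(pattern: str) -> str:
        return re.search(pattern, text).group(1)

    return {
        "clients": int(grab(r"number of clients: (\d+)")),
        "transactions": int(grab(r"number of transactions actually processed: (\d+)")),
        "latency_ms": float(grab(r"latency average = ([\d.]+) ms")),
        "tps_including": float(grab(r"tps = ([\d.]+) \(including")),
        "tps_excluding": float(grab(r"tps = ([\d.]+) \(excluding")),
    }


result = parse_pgbench(SAMPLE)
# Latency implied by the client count and throughput, in milliseconds.
derived_latency_ms = 1000 * result["clients"] / result["tps_including"]
print(result)
print(round(derived_latency_ms, 3))
```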

Kingbase

Initialize test data

./kbbench -h 127.0.0.1 -p 8092 -U kingbase --initialize -d pgtest

20 clients, 10 threads

./kbbench -c 20 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 8092 -U kingbase -d pgtest

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 20

number of threads: 10

duration: 600 s

number of transactions actually processed: 301063

latency average = 39.865 ms

tps = 501.698395 (including connections establishing)

tps = 501.726618 (excluding connections establishing)

statement latencies in milliseconds:

0.010 \set aid random(1, 100000 * :scale)

0.007 \set bid random(1, 1 * :scale)

0.006 \set tid random(1, 10 * :scale)

0.012 \set delta random(-5000, 5000)

0.212 BEGIN;

0.364 UPDATE kbbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.269 SELECT abalance FROM kbbench_accounts WHERE aid = :aid;

23.948 UPDATE kbbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

13.135 UPDATE kbbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.357 INSERT INTO kbbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

1.531 END;

80 clients, 10 threads

./kbbench -c 80 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 8092 -U kingbase -d pgtest

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 80

number of threads: 10

duration: 600 s

number of transactions actually processed: 278124

latency average = 172.675 ms

tps = 463.298596 (including connections establishing)

tps = 463.327583 (excluding connections establishing)

statement latencies in milliseconds:

0.015 \set aid random(1, 100000 * :scale)

0.010 \set bid random(1, 1 * :scale)

0.008 \set tid random(1, 10 * :scale)

0.008 \set delta random(-5000, 5000)

0.238 BEGIN;

0.486 UPDATE kbbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.300 SELECT abalance FROM kbbench_accounts WHERE aid = :aid;

150.355 UPDATE kbbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

18.987 UPDATE kbbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.366 INSERT INTO kbbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

1.760 END;

100 clients, 10 threads

./kbbench -c 100 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 8092 -U kingbase -d pgtest

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 100

number of threads: 10

duration: 600 s

number of transactions actually processed: 266777

latency average = 225.012 ms

tps = 444.420416 (including connections establishing)

tps = 444.443498 (excluding connections establishing)

statement latencies in milliseconds:

0.014 \set aid random(1, 100000 * :scale)

0.010 \set bid random(1, 1 * :scale)

0.008 \set tid random(1, 10 * :scale)

0.008 \set delta random(-5000, 5000)

0.204 BEGIN;

0.509 UPDATE kbbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.301 SELECT abalance FROM kbbench_accounts WHERE aid = :aid;

156.751 UPDATE kbbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

19.797 UPDATE kbbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.361 INSERT INTO kbbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

1.875 END;

150 clients, 10 threads

./kbbench -c 150 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 8092 -U kingbase -d pgtest

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 150

number of threads: 10

duration: 600 s

number of transactions actually processed: 249824

latency average = 360.465 ms

tps = 416.129559 (including connections establishing)

tps = 416.144235 (excluding connections establishing)

statement latencies in milliseconds:

0.015 \set aid random(1, 100000 * :scale)

0.010 \set bid random(1, 1 * :scale)

0.008 \set tid random(1, 10 * :scale)

0.008 \set delta random(-5000, 5000)

0.226 BEGIN;

0.587 UPDATE kbbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.311 SELECT abalance FROM kbbench_accounts WHERE aid = :aid;

191.163 UPDATE kbbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

21.292 UPDATE kbbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.390 INSERT INTO kbbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

1.998 END;

Highgo

Initialize test data

./pgbench -h 127.0.0.1 -p 5866 -U sysdba --initialize -d highgo

20 clients, 10 threads

./pgbench -c 20 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 5866 -U sysdba -d highgo

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 20

number of threads: 10

duration: 600 s

number of transactions actually processed: 183881

latency average = 65.269 ms

tps = 306.423778 (including connections establishing)

tps = 306.449055 (excluding connections establishing)

statement latencies in milliseconds:

0.012 \set aid random(1, 100000 * :scale)

0.013 \set bid random(1, 1 * :scale)

0.008 \set tid random(1, 10 * :scale)

0.013 \set delta random(-5000, 5000)

0.344 BEGIN;

0.424 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.310 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

39.135 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

21.865 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.467 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

2.645 END;

80 clients, 10 threads

./pgbench -c 80 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 5866 -U sysdba -d highgo

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 80

number of threads: 10

duration: 600 s

number of transactions actually processed: 132715

latency average = 362.560 ms

tps = 220.653200 (including connections establishing)

tps = 220.678099 (excluding connections establishing)

statement latencies in milliseconds:

0.015 \set aid random(1, 100000 * :scale)

0.012 \set bid random(1, 1 * :scale)

0.011 \set tid random(1, 10 * :scale)

0.011 \set delta random(-5000, 5000)

0.426 BEGIN;

0.795 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.352 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

316.679 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

39.003 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.564 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

3.899 END;

100 clients, 10 threads

./pgbench -c 100 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 5866 -U sysdba -d highgo

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 100

number of threads: 10

duration: 600 s

number of transactions actually processed: 237356

latency average = 252.963 ms

tps = 395.314312 (including connections establishing)

tps = 395.332985 (excluding connections establishing)

statement latencies in milliseconds:

0.014 \set aid random(1, 100000 * :scale)

0.011 \set bid random(1, 1 * :scale)

0.010 \set tid random(1, 10 * :scale)

0.009 \set delta random(-5000, 5000)

0.229 BEGIN;

0.580 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.293 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

226.518 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

22.555 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.344 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

2.183 END;

150 clients, 10 threads

./pgbench -c 150 -j 10 -M extended -n -T 600 -r -h 127.0.0.1 -p 5866 -U sysdba -d highgo

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 1

query mode: extended

number of clients: 150

number of threads: 10

duration: 600 s

number of transactions actually processed: 228893

latency average = 393.673 ms

tps = 381.026638 (including connections establishing)

tps = 381.043226 (excluding connections establishing)

statement latencies in milliseconds:

0.012 \set aid random(1, 100000 * :scale)

0.011 \set bid random(1, 1 * :scale)

0.009 \set tid random(1, 10 * :scale)

0.009 \set delta random(-5000, 5000)

0.217 BEGIN;

1.049 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.294 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

365.414 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

23.522 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.573 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

2.047 END;

Application compatibility and stress testing

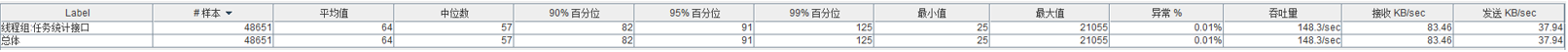

10 并发

本次采用递增模式压测,并发为 10,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

任务统计接口,模拟 10 用户并发时,发送给服务器的请求数量为 48651,平均响应时间为 64ms,本次测试中的异常为 0.01%,吞吐量(每秒完成的请求数)为 148.3/秒,每秒从服务器接收的数据量为 83.46,每秒从服务器发送的数据量为 37.94。并发用户数为 10 的情况下,系统响应时间 ≤1 秒,满足要求。

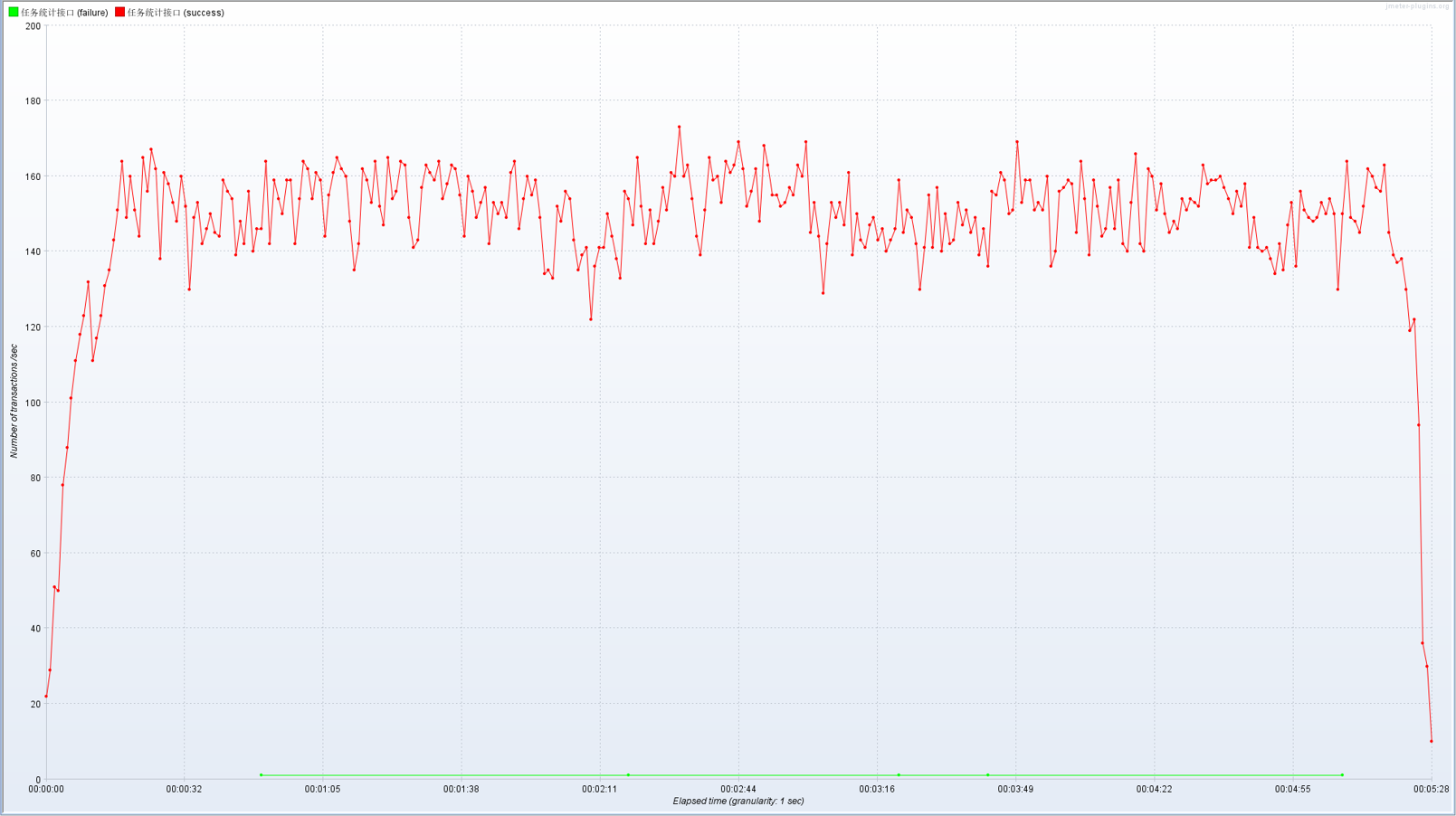

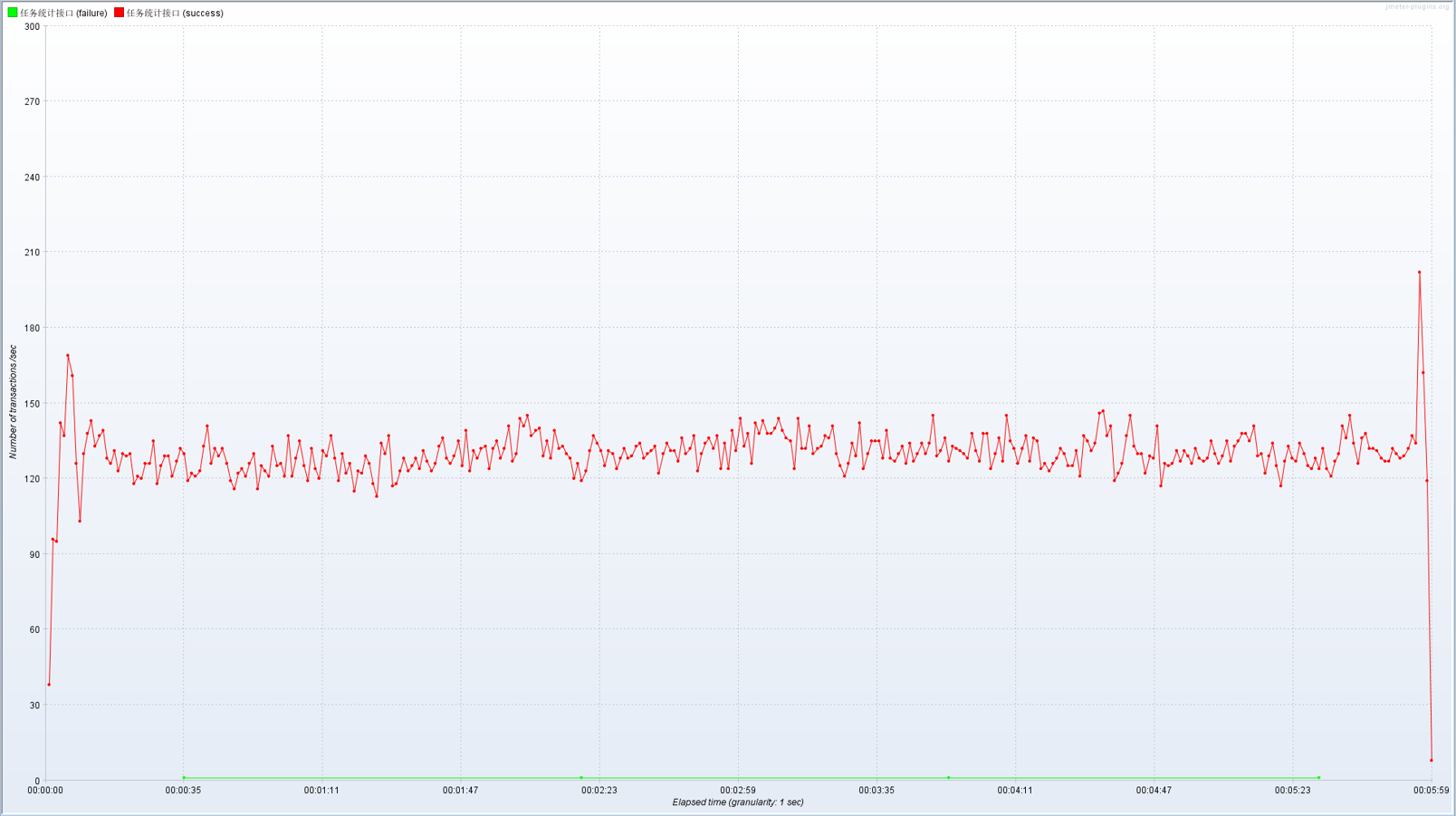

50 并发

本次采用递增模式压测,并发为 50,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

任务统计接口,模拟 50 用户并发时,发送给服务器的请求数量为 48763,平均响应时间为 345ms,本次测试中的异常为 0.01%,吞吐量(每秒完成的请求数)为 130.7/秒,每秒从服务器接收的数据量为 73.55,每秒从服务器发送的数据量为 33.44。并发用户数为 50 的情况下,系统响应时间 ≤1 秒,满足要求。

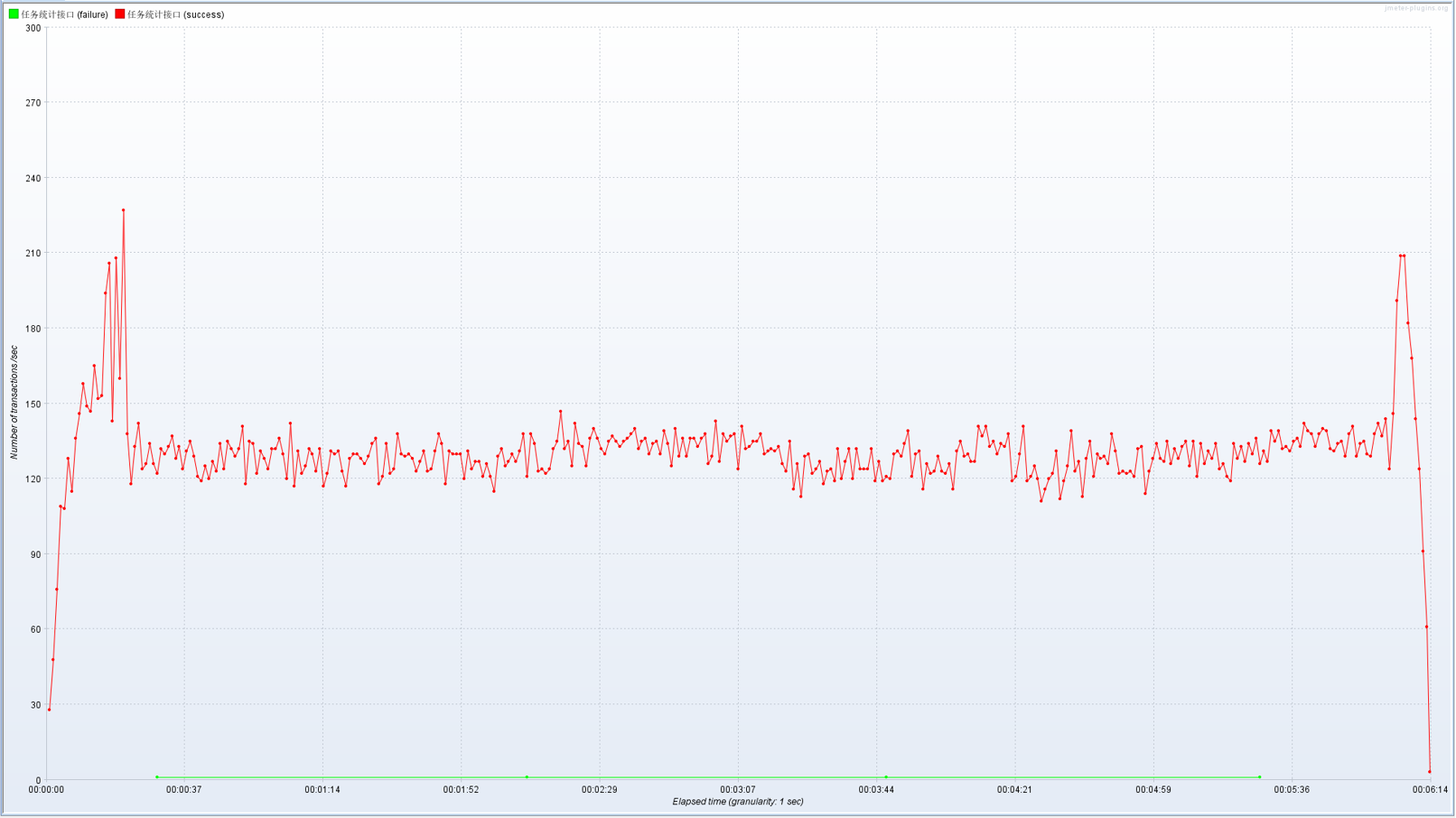

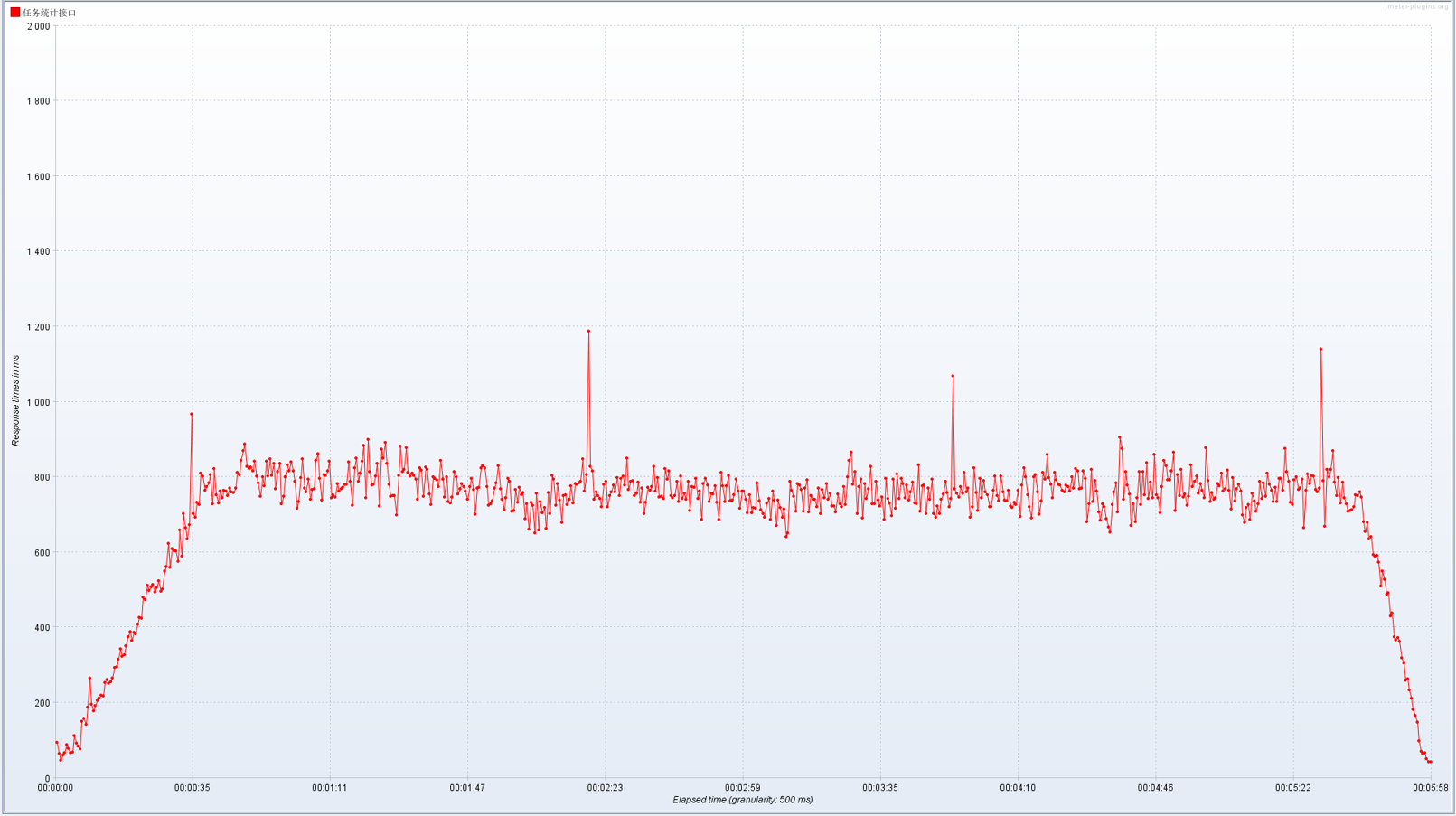

100 并发

本次采用递增模式压测,并发为 100,项目日志开启 info 状态。

聚合报告

每秒的响应分布图(TPS)

响应时间分布图(RT)

任务统计接口,模拟 100 用户并发时,发送给服务器的请求数量为 46601,平均响应时间为 706ms,本次测试中的异常为 0.01%,吞吐量(每秒完成的请求数)为 130.2/秒,每秒从服务器接收的数据量为 73.24,每秒从服务器发送的数据量为 33.30。并发用户数为 100 的情况下,系统响应时间 ≤1 秒,满足要求。

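Test summary

Stress tests were run against the level 1 to level 6 extent loading endpoints on the ten-million-record map data and against the task statistics endpoint, with concurrency increased step by step through 10, 50 and 100 users. Average response times under concurrent load were good. As concurrency and the number of requests grew, response times increased but stayed within the acceptable range and met the system's operating requirements. Error rates ranged from 0.01% to 0.88%, below 1% in every run, which is considered normal. Throughput was also within the expected range and meets operating needs. No abnormal behavior was observed under concurrent use or repeated runs. With 100 concurrent users the average response time was ≤1 s, and the system was stable and reliable.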

Middleware (Nginx, Redis) compatibility testing

Nginx

Results overview:

| Concurrency | Total requests | Failed requests | Requests per second [#/s] | Mean time per request [ms] |
|---|---|---|---|---|
| 100 | 10000 | 0 | 15168.61 | 6.593 |
| 1000 | 10000 | 0 | 12088.97 | 82.720 |
| 10000 | 10000 | 8385 | 13292.36 | 752.312 |
| 10000 | 100000 | 97523 | 18773.96 | 532.653 |
| 20000 | 100000 | 97441 | 335.82 | 59555.126 |
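Two derived figures make the overview table easier to read: the failure rate of each run, and the mean time per request implied by concurrency divided by requests per second. The following sketch computes both from the table values; it is an illustration for reading the results, not part of the original test procedure.

```python
import math

# (concurrency, total requests, failed requests, requests per second,
#  mean time per request in ms), copied from the overview table.
ROWS = [
    (100, 10000, 0, 15168.61, 6.593),
    (1000, 10000, 0, 12088.97, 82.720),
    (10000, 10000, 8385, 13292.36, 752.312),
    (10000, 100000, 97523, 18773.96, 532.653),
    (20000, 100000, 97441, 335.82, 59555.126),
]

failure_rates = []
consistent = []
for concurrency, total, failed, rps, reported_ms in ROWS:
    rate = round(failed / total * 100, 3)     # share of failed requests, in %
    derived_ms = concurrency / rps * 1000     # concurrency / throughput, in ms
    failure_rates.append(rate)
    consistent.append(math.isclose(derived_ms, reported_ms, rel_tol=1e-3))
    print(f"c={concurrency:>5} n={total:>6}: {rate:6.2f}% failed, "
          f"derived {derived_ms:9.2f} ms vs reported {reported_ms:9.2f} ms")
```

The derived and reported times agree in every row, and the failure rate jumps from 0% at 1000 concurrent connections to over 80% at 10000. The high requests-per-second figures in the last three rows should therefore be read with care, because most of those requests failed.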

Test 1: proxy configuration and access

The relevant configuration is as follows:

http {
    include mime.types;
    default_type application/octet-stream;
    #access_log logs/access.log main;
    sendfile on;
    #tcp_nopush on;
    #keepalive_timeout 0;
    keepalive_timeout 65;
    #gzip on;
    server {
        listen 8090;
        server_name localhost;
        root /opt/Projects/dist;
        location / {
            try_files $uri $uri/ @router;
            index index.html index.htm;
            client_max_body_size 600m;
        }
        location @router {
            rewrite ^.*$ /index.html last;
        }
        location ^~/prod-api/ {
            rewrite ^/prod-api/(.*)$ /$1 break;
            proxy_pass http://127.0.0.1:8087;
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}

Requesting http://X.X.X.X:8090/index returns the expected page.
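The `/prod-api/` location strips the prefix before proxying to the backend on port 8087. The sketch below mimics that mapping in Python to show which upstream URL a given request path would reach. It models only the prefix match and the rewrite regex from the configuration, ignores query strings, and uses a made-up example path.

```python
import re

UPSTREAM = "http://127.0.0.1:8087"  # proxy_pass target from the configuration


def upstream_url(uri: str):
    """Return the upstream URL for a /prod-api/ request, or None if not proxied."""
    if not uri.startswith("/prod-api/"):
        return None  # handled by the static-file locations instead
    # Same pattern and replacement as: rewrite ^/prod-api/(.*)$ /$1 break;
    rewritten = re.sub(r"^/prod-api/(.*)$", r"/\1", uri)
    return UPSTREAM + rewritten


# "/prod-api/system/user/list" is a hypothetical path used only for illustration.
print(upstream_url("/prod-api/system/user/list"))
print(upstream_url("/index"))
```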

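Test 2: stress testing

Tool: ApacheBench (ab)

ApacheBench opens many concurrent connections to simulate multiple visitors requesting the same URL at once. Because it targets a URL, it can load-test not only Apache but also Nginx, lighttpd, Tomcat, IIS and other web servers. It is a command-line tool that places little demand on the load-generating machine in terms of CPU and memory, yet it can put a very heavy load on the target server, in a way similar to a CC attack.

The report fields are as follows:

Document Path: the page under test

Document Length: page size

Concurrency Level: number of concurrent requests

Time taken for tests: total duration of the test

Complete requests: number of completed requests

Failed requests: number of failed requests

Total transferred: total network traffic during the test

HTML transferred: total HTML content transferred during the test

Requests per second: one of the two key metrics, equivalent to transactions per second in LoadRunner (LR); "(mean)" indicates an average

Time per request (mean): the other key metric, equivalent to average transaction response time in LR; "(mean)" indicates an average

Time per request (mean, across all concurrent requests): average time per request across all concurrent requests, i.e. total test time divided by the number of requests

Transfer rate: average network traffic per second, which helps rule out excessive network traffic as the cause of long response times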
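When several ab runs need to be compared, the fields described above can be pulled out of the report text programmatically. The sketch below does this with regular expressions; the sample is copied from the 100-concurrency run in this report, and `parse_ab` is a hypothetical helper name.

```python
import re

# Lines copied from the 100-concurrency ab report in this document.
SAMPLE = """\
Concurrency Level: 100
Time taken for tests: 0.659 seconds
Complete requests: 10000
Failed requests: 0
Requests per second: 15168.61 [#/sec] (mean)
Time per request: 6.593 [ms] (mean)
Time per request: 0.066 [ms] (mean, across all concurrent requests)
Transfer rate: 225662.76 [Kbytes/sec] received
"""

# The "(mean)" suffix selects the first "Time per request" line, not the second.
PATTERNS = {
    "concurrency": r"Concurrency Level:\s+(\d+)",
    "complete_requests": r"Complete requests:\s+(\d+)",
    "failed_requests": r"Failed requests:\s+(\d+)",
    "requests_per_second": r"Requests per second:\s+([\d.]+)",
    "time_per_request_ms": r"Time per request:\s+([\d.]+) \[ms\] \(mean\)",
}


def parse_ab(text: str) -> dict:
    """Return the selected ab report fields as floats."""
    return {name: float(re.search(pattern, text).group(1))
            for name, pattern in PATTERNS.items()}


result = parse_ab(SAMPLE)
print(result)
```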

100 concurrent connections, 10,000 total requests

ab -c 100 -n 10000 http://X.X.X.X:8090/index

This is ApacheBench, Version 2.3 <$Revision: 1874286 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking X.X.X.X (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: nginx/1.24.0

Server Hostname: X.X.X.X

Server Port: 8090

Document Path: /index

Document Length: 14998 bytes

Concurrency Level: 100

Time taken for tests: 0.659 seconds

Complete requests: 10000

Failed requests: 0

Total transferred: 152340000 bytes

HTML transferred: 149980000 bytes

Requests per second: 15168.61 [#/sec] (mean)

Time per request: 6.593 [ms] (mean)

Time per request: 0.066 [ms] (mean, across all concurrent requests)

Transfer rate: 225662.76 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 1 1.0 1 5

Processing: 0 6 2.7 5 15

Waiting: 0 5 2.3 5 15

Total: 0 7 2.2 6 16

Percentage of the requests served within a certain time (ms)

50% 6

66% 8

75% 8

80% 9

90% 10

95% 11

98% 11

99% 12

100% 16 (longest request)

1000 concurrent connections, 10,000 total requests

ab -c 1000 -n 10000 http://X.X.X.X:8090/index

This is ApacheBench, Version 2.3 <$Revision: 1874286 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking X.X.X.X (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: nginx/1.24.0

Server Hostname: X.X.X.X

Server Port: 8090

Document Path: /index

Document Length: 14998 bytes

Concurrency Level: 1000

Time taken for tests: 0.827 seconds

Complete requests: 10000

Failed requests: 0

Total transferred: 152340000 bytes

HTML transferred: 149980000 bytes

Requests per second: 12088.97 [#/sec] (mean)

Time per request: 82.720 [ms] (mean)

Time per request: 0.083 [ms] (mean, across all concurrent requests)

Transfer rate: 179847.11 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 5 5.9 3 24

Processing: 2 14 25.8 8 223

Waiting: 0 12 25.7 6 221

Total: 3 19 28.4 11 234

Percentage of the requests served within a certain time (ms)

50% 11

66% 13

75% 14

80% 14

90% 48

95% 59

98% 93

99% 217

100% 234 (longest request)

10000 concurrent connections, 10,000 total requests

ab -c 10000 -n 10000 http://X.X.X.X:8090/index

This is ApacheBench, Version 2.3 <$Revision: 1874286 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking X.X.X.X (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: nginx/1.24.0

Server Hostname: X.X.X.X

Server Port: 8090

Document Path: /index

Document Length: 0 bytes

Concurrency Level: 10000

Time taken for tests: 0.752 seconds

Complete requests: 10000

Failed requests: 8385

(Connect: 0, Receive: 0, Length: 1335, Exceptions: 7050)

Total transferred: 41350414 bytes

HTML transferred: 40692918 bytes

Requests per second: 13292.36 [#/sec] (mean)

Time per request: 752.312 [ms] (mean)

Time per request: 0.075 [ms] (mean, across all concurrent requests)

Transfer rate: 53676.22 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 170 81.0 194 268

Processing: 57 223 79.2 216 656

Waiting: 0 30 77.0 0 273

Total: 238 393 88.4 393 695

Percentage of the requests served within a certain time (ms)

50% 393

66% 398

75% 404

80% 408

90% 519

95% 595

98% 631

99% 663

100% 695 (longest request)

10000 concurrent connections, 100,000 total requests

ab -c 10000 -n 100000 http://X.X.X.X:8090/index

This is ApacheBench, Version 2.3 <$Revision: 1874286 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking X.X.X.X (be patient)

Completed 10000 requests

Completed 20000 requests

Completed 30000 requests

Completed 40000 requests

Completed 50000 requests

Completed 60000 requests

Completed 70000 requests

Completed 80000 requests

Completed 90000 requests

Completed 100000 requests

Finished 100000 requests

Server Software: nginx/1.24.0

Server Hostname: X.X.X.X

Server Port: 8090

Document Path: /index

Document Length: 0 bytes

Concurrency Level: 10000

Time taken for tests: 5.327 seconds

Complete requests: 100000

Failed requests: 97523

(Connect: 0, Receive: 0, Length: 18635, Exceptions: 78888)

Total transferred: 288072498 bytes

HTML transferred: 283608794 bytes

Requests per second: 18773.96 [#/sec] (mean)

Time per request: 532.653 [ms] (mean)

Time per request: 0.053 [ms] (mean, across all concurrent requests)

Transfer rate: 52815.05 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 263 458.6 147 3229

Processing: 62 196 171.1 151 3707

Waiting: 0 45 152.7 0 3487

Total: 168 459 529.5 325 4244

Percentage of the requests served within a certain time (ms)

50% 325

66% 350

75% 361

80% 400

90% 662

95% 1369

98% 2201

99% 3593

100% 4244 (longest request)

20000 concurrent connections, 100,000 total requests

ab -c 20000 -n 100000 http://X.X.X.X:8090/index

This is ApacheBench, Version 2.3 <$Revision: 1874286 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking X.X.X.X (be patient)

Completed 10000 requests

Completed 20000 requests

Completed 30000 requests

Completed 40000 requests

Completed 50000 requests

Completed 60000 requests

Completed 70000 requests

Completed 80000 requests

Completed 90000 requests

Completed 100000 requests

Finished 100000 requests

Server Software: nginx/1.24.0

Server Hostname: X.X.X.X

Server Port: 8090

Document Path: /index

Document Length: 0 bytes

Concurrency Level: 20000

Time taken for tests: 297.776 seconds

Complete requests: 100000

Failed requests: 97441

(Connect: 0, Receive: 0, Length: 15380, Exceptions: 82061)

Total transferred: 261648950 bytes

HTML transferred: 257202946 bytes

Requests per second: 335.82 [#/sec] (mean)

Time per request: 59555.126 [ms] (mean)

Time per request: 2.978 [ms] (mean, across all concurrent requests)

Transfer rate: 858.08 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 24233 11842.6 27981 37425

Processing: 90 18430 37760.5 384 244569

Waiting: 0 2697 8890.6 0 37225

Total: 171 42663 39232.2 34202 278455

Percentage of the requests served within a certain time (ms)

50% 34202

66% 37088

75% 37188

80% 39945

90% 67952

95% 164937

98% 198072

99% 200358

100% 278455 (longest request)

Redis

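Test content: load testing

Using the redis-benchmark tool, we tested Redis under stepwise increasing numbers of concurrent clients and total requests. The results are presented as a brief report and a detailed report. The brief report lists, for each command, the number of requests the server executed per second. The detailed report consists of four parts: the test environment, the latency percentile distribution, the cumulative latency distribution, and a summary. The reports are given below.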
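Each line of the brief report has the form `<test>: <N> requests per second, p50=<X> msec`, which makes it easy to tabulate. The following is a minimal sketch, not part of the original test procedure, of how such lines could be parsed; the names `QuietLine` and `parse_quiet_line` are hypothetical, and the sample lines are copied from the 100-client run in this report.

```python
import re
from typing import NamedTuple, Optional


class QuietLine(NamedTuple):
    test: str      # command label, e.g. "SET"
    rps: float     # requests per second
    p50_ms: float  # median latency in milliseconds


_QUIET_RE = re.compile(
    r"^(?P<test>.+?):\s+(?P<rps>[\d.]+) requests per second, "
    r"p50=(?P<p50>[\d.]+) msec$"
)


def parse_quiet_line(line: str) -> Optional[QuietLine]:
    """Parse one brief-report line; return None if it does not match."""
    m = _QUIET_RE.match(line.strip())
    if m is None:
        return None
    return QuietLine(m.group("test"), float(m.group("rps")), float(m.group("p50")))


SAMPLE = """\
PING_INLINE: 81766.15 requests per second, p50=0.471 msec
SET: 86281.27 requests per second, p50=0.463 msec
LRANGE_600 (first 600 elements): 7540.34 requests per second, p50=7.767 msec
"""

rows = [r for r in map(parse_quiet_line, SAMPLE.splitlines()) if r is not None]
slowest = min(rows, key=lambda r: r.rps)
print(f"slowest test: {slowest.test} at {slowest.rps} requests per second")
```

Parsing the lines into records like this makes it straightforward to compare the same command across the concurrency levels used in the runs.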

redis-benchmark command options

-h <hostname> Host or IP address of the Redis server to test (default 127.0.0.1)

-p <port> Port the Redis server listens on (default 6379)

-s <socket> Server socket (overrides host and port)

-a <password> Password used to authenticate with the Redis server (AUTH)

--user <username> Used to send ACL style 'AUTH username pass'. Needs -a.

-u <uri> Server URI.

-c <clients> Number of parallel client connections used for each command (default 50)

-n <requests> Total number of requests issued for each command (default 100000)

-d <size> Data size of the SET/GET value, in bytes (default 3)

--dbnum <db> Database number to select (default 0)

-3 Start session in RESP3 protocol mode.

--threads <num> Enable multi-thread mode with the given number of threads

--cluster Enable cluster mode. If the command is supplied on the command line in cluster mode, the key must contain "{tag}". Otherwise, the command will not be sent to the right cluster node.

--enable-tracking Send CLIENT TRACKING on before starting benchmark.

-k <boolean> 1=keep alive 0=reconnect (default 1); whether the client keeps its connection to the server open

-r <keyspacelen> Use random keys for SET/GET/INCR, random values for SADD, random members and scores for ZADD. Using this option the benchmark will expand the string __rand_int__ inside an argument with a 12 digits number in the specified range from 0 to keyspacelen-1. The substitution changes every time a command is executed. Default tests use this to hit random keys in the specified range. Note: If -r is omitted, all commands in a benchmark will use the same key.

-P <numreq> Pipeline <numreq> requests. Default 1 (no pipeline).

-q Quiet mode: print only the brief report

--precision Number of decimal places to display in latency output (default 0)

--csv Output the report in CSV format

-l Loop: run the tests forever

-t <tests> Comma-separated list of commands to test (get,set,lpush...), with no spaces; by default all commands are tested

-I Idle mode. Just open N idle connections and wait.

-x Read last argument from STDIN.

--help Output this help and exit.

--version Output version and exit.

Brief report

100 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 100 -n 100000 -d 10 -a mima -q

PING_INLINE: 81766.15 requests per second, p50=0.471 msec

PING_MBULK: 76394.20 requests per second, p50=0.599 msec

SET: 86281.27 requests per second, p50=0.463 msec

GET: 69589.42 requests per second, p50=0.823 msec

INCR: 78740.16 requests per second, p50=0.471 msec

LPUSH: 56401.58 requests per second, p50=1.071 msec

RPUSH: 81566.07 requests per second, p50=0.479 msec

LPOP: 58858.15 requests per second, p50=1.039 msec

RPOP: 61387.36 requests per second, p50=1.007 msec

SADD: 53078.56 requests per second, p50=1.103 msec

HSET: 55524.71 requests per second, p50=0.959 msec

SPOP: 56211.35 requests per second, p50=1.071 msec

ZADD: 56721.50 requests per second, p50=1.047 msec

ZPOPMIN: 52798.31 requests per second, p50=1.103 msec

LPUSH (needed to benchmark LRANGE): 52002.08 requests per second, p50=1.199 msec

LRANGE_100 (first 100 elements): 30293.85 requests per second, p50=1.879 msec

LRANGE_300 (first 300 elements): 12036.59 requests per second, p50=4.455 msec

LRANGE_500 (first 500 elements): 8816.79 requests per second, p50=6.743 msec

LRANGE_600 (first 600 elements): 7540.34 requests per second, p50=7.767 msec

MSET (10 keys): 47984.64 requests per second, p50=1.439 msec

XADD: 51413.88 requests per second, p50=1.335 msec

1000 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 1000 -n 100000 -d 10 -a mima -q

PING_INLINE: 54171.18 requests per second, p50=10.199 msec

PING_MBULK: 50327.12 requests per second, p50=10.951 msec

SET: 45998.16 requests per second, p50=12.559 msec

GET: 51255.77 requests per second, p50=10.967 msec

INCR: 52521.01 requests per second, p50=11.231 msec

LPUSH: 56465.27 requests per second, p50=10.063 msec

RPUSH: 57077.62 requests per second, p50=9.791 msec

LPOP: 51072.52 requests per second, p50=11.519 msec

RPOP: 47460.84 requests per second, p50=12.519 msec

SADD: 47596.38 requests per second, p50=12.383 msec

HSET: 50479.56 requests per second, p50=12.175 msec

SPOP: 51626.23 requests per second, p50=11.735 msec

ZADD: 48543.69 requests per second, p50=12.815 msec

ZPOPMIN: 51813.47 requests per second, p50=11.103 msec

LPUSH (needed to benchmark LRANGE): 48123.20 requests per second, p50=11.791 msec

LRANGE_100 (first 100 elements): 29171.53 requests per second, p50=18.703 msec

LRANGE_300 (first 300 elements): 11830.12 requests per second, p50=42.975 msec

LRANGE_500 (first 500 elements): 8086.04 requests per second, p50=63.039 msec

LRANGE_600 (first 600 elements): 6713.66 requests per second, p50=74.815 msec

MSET (10 keys): 49480.46 requests per second, p50=14.079 msec

XADD: 49407.12 requests per second, p50=12.199 msec

2000 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 2000 -n 100000 -d 10 -a mima -q

PING_INLINE: 43252.59 requests per second, p50=24.191 msec

PING_MBULK: 44603.03 requests per second, p50=23.599 msec

SET: 42105.26 requests per second, p50=26.031 msec

GET: 43994.72 requests per second, p50=23.903 msec

INCR: 42211.91 requests per second, p50=25.247 msec

LPUSH: 41407.87 requests per second, p50=25.839 msec

RPUSH: 49407.12 requests per second, p50=20.831 msec

LPOP: 51599.59 requests per second, p50=20.511 msec

RPOP: 40306.33 requests per second, p50=26.527 msec

SADD: 42140.75 requests per second, p50=25.311 msec

HSET: 41963.91 requests per second, p50=26.079 msec

SPOP: 49091.80 requests per second, p50=21.759 msec

ZADD: 43478.26 requests per second, p50=24.991 msec

ZPOPMIN: 45351.48 requests per second, p50=23.567 msec

LPUSH (needed to benchmark LRANGE): 50377.83 requests per second, p50=21.007 msec

LRANGE_100 (first 100 elements): 28137.31 requests per second, p50=39.743 msec

LRANGE_300 (first 300 elements): 11960.29 requests per second, p50=85.119 msec

LRANGE_500 (first 500 elements): 7524.45 requests per second, p50=133.503 msec

LRANGE_600 (first 600 elements): 6175.51 requests per second, p50=160.511 msec

MSET (10 keys): 45106.00 requests per second, p50=26.239 msec

XADD: 43066.32 requests per second, p50=24.895 msec

5000 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 5000 -n 100000 -d 10 -a mima -q

PING_INLINE: 48875.86 requests per second, p50=57.247 msec

PING_MBULK: 48732.94 requests per second, p50=54.431 msec

SET: 45516.61 requests per second, p50=59.647 msec

GET: 49627.79 requests per second, p50=53.215 msec

INCR: 47846.89 requests per second, p50=55.135 msec

LPUSH: 47014.57 requests per second, p50=60.607 msec

RPUSH: 46403.71 requests per second, p50=58.207 msec

LPOP: 49140.05 requests per second, p50=55.199 msec

RPOP: 45641.26 requests per second, p50=58.655 msec

SADD: 47438.33 requests per second, p50=56.639 msec

HSET: 49309.66 requests per second, p50=51.935 msec

SPOP: 49825.61 requests per second, p50=52.703 msec

ZADD: 49726.51 requests per second, p50=53.023 msec

ZPOPMIN: 50787.20 requests per second, p50=50.335 msec

LPUSH (needed to benchmark LRANGE): 49578.58 requests per second, p50=52.223 msec

LRANGE_100 (first 100 elements): 27510.32 requests per second, p50=92.415 msec

LRANGE_300 (first 300 elements): 9285.05 requests per second, p50=270.335 msec

LRANGE_500 (first 500 elements): 6218.13 requests per second, p50=401.407 msec

LRANGE_600 (first 600 elements): 5013.79 requests per second, p50=508.927 msec

MSET (10 keys): 46189.38 requests per second, p50=66.047 msec

XADD: 48192.77 requests per second, p50=55.007 msec

8000 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 8000 -n 100000 -d 10 -a mima -q

PING_INLINE: 50530.57 requests per second, p50=77.375 msec

PING_MBULK: 51150.89 requests per second, p50=81.023 msec

SET: 50428.64 requests per second, p50=83.519 msec

GET: 49751.24 requests per second, p50=82.495 msec

INCR: 47869.79 requests per second, p50=97.599 msec

LPUSH: 54259.36 requests per second, p50=76.287 msec

RPUSH: 54674.69 requests per second, p50=76.287 msec

LPOP: 53219.80 requests per second, p50=81.343 msec

RPOP: 53763.44 requests per second, p50=75.967 msec

SADD: 51098.62 requests per second, p50=80.383 msec

HSET: 51413.88 requests per second, p50=79.999 msec

SPOP: 48875.86 requests per second, p50=82.687 msec

ZADD: 52438.39 requests per second, p50=76.607 msec

ZPOPMIN: 50025.02 requests per second, p50=82.495 msec

LPUSH (needed to benchmark LRANGE): 48543.69 requests per second, p50=84.095 msec

LRANGE_100 (first 100 elements): 28304.56 requests per second, p50=142.079 msec

LRANGE_300 (first 300 elements): 7144.39 requests per second, p50=582.143 msec

LRANGE_500 (first 500 elements): 4888.78 requests per second, p50=820.223 msec

LRANGE_600 (first 600 elements): 3738.32 requests per second, p50=1083.391 msec

MSET (10 keys): 43975.38 requests per second, p50=131.967 msec

XADD: 46168.05 requests per second, p50=96.703 msec

Detailed report

100 parallel client connections, 100000 requests per command, 10-byte payload

redis-benchmark -h 127.0.0.1 -p 56379 -c 100 -n 100000 -d 10 -a mima

====== PING_INLINE ======

100000 requests completed in 1.66 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.271 milliseconds (cumulative count 4)

50.000% <= 0.943 milliseconds (cumulative count 50907)

75.000% <= 1.271 milliseconds (cumulative count 75242)

87.500% <= 1.599 milliseconds (cumulative count 87568)

93.750% <= 1.911 milliseconds (cumulative count 93812)

96.875% <= 2.191 milliseconds (cumulative count 96886)

98.438% <= 2.423 milliseconds (cumulative count 98453)

99.219% <= 2.727 milliseconds (cumulative count 99226)

99.609% <= 3.863 milliseconds (cumulative count 99610)

99.805% <= 4.663 milliseconds (cumulative count 99805)

99.902% <= 5.551 milliseconds (cumulative count 99905)

99.951% <= 5.895 milliseconds (cumulative count 99952)

99.976% <= 6.183 milliseconds (cumulative count 99976)

99.988% <= 6.295 milliseconds (cumulative count 99988)

99.994% <= 6.351 milliseconds (cumulative count 99994)

99.997% <= 6.375 milliseconds (cumulative count 99998)

99.998% <= 6.399 milliseconds (cumulative count 100000)

100.000% <= 6.399 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.033% <= 0.303 milliseconds (cumulative count 33)

6.101% <= 0.407 milliseconds (cumulative count 6101)

11.926% <= 0.503 milliseconds (cumulative count 11926)

20.978% <= 0.607 milliseconds (cumulative count 20978)

27.105% <= 0.703 milliseconds (cumulative count 27105)

33.089% <= 0.807 milliseconds (cumulative count 33089)

45.584% <= 0.903 milliseconds (cumulative count 45584)

57.624% <= 1.007 milliseconds (cumulative count 57624)

64.879% <= 1.103 milliseconds (cumulative count 64879)

71.381% <= 1.207 milliseconds (cumulative count 71381)

76.920% <= 1.303 milliseconds (cumulative count 76920)

81.633% <= 1.407 milliseconds (cumulative count 81633)

84.894% <= 1.503 milliseconds (cumulative count 84894)

87.787% <= 1.607 milliseconds (cumulative count 87787)

90.128% <= 1.703 milliseconds (cumulative count 90128)

92.219% <= 1.807 milliseconds (cumulative count 92219)

93.706% <= 1.903 milliseconds (cumulative count 93706)

94.969% <= 2.007 milliseconds (cumulative count 94969)

96.012% <= 2.103 milliseconds (cumulative count 96012)

99.530% <= 3.103 milliseconds (cumulative count 99530)

99.677% <= 4.103 milliseconds (cumulative count 99677)

99.845% <= 5.103 milliseconds (cumulative count 99845)

99.973% <= 6.103 milliseconds (cumulative count 99973)

100.000% <= 7.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 60168.47 requests per second

latency summary (msec):

avg min p50 p95 p99 max

1.039 0.264 0.943 2.015 2.591 6.399
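The cumulative distribution and the latency summary describe the same data, so one can be used to sanity-check the other. The following is a minimal sketch, not part of the original test, that parses cumulative-distribution lines and finds the pair of bucket bounds enclosing a target percentile. The function names `parse_distribution` and `bracket` are hypothetical, and the sample lines are copied from the PING_INLINE output above.

```python
import re
from typing import List, Optional, Tuple

_DIST_RE = re.compile(
    r"^\s*([\d.]+)% <= ([\d.]+) milliseconds \(cumulative count (\d+)\)\s*$"
)


def parse_distribution(text: str) -> List[Tuple[float, float]]:
    """Return (percent, latency_ms) pairs in the order they appear."""
    points = []
    for line in text.splitlines():
        m = _DIST_RE.match(line)
        if m:
            points.append((float(m.group(1)), float(m.group(2))))
    return points


def bracket(
    points: List[Tuple[float, float]], target: float
) -> Tuple[Optional[float], Optional[float]]:
    """Return (lower_ms, upper_ms) bucket bounds enclosing the target percentile."""
    lower = None
    for pct, ms in points:
        if pct >= target:
            return lower, ms
        lower = ms
    return lower, None


DIST_SAMPLE = """\
93.706% <= 1.903 milliseconds (cumulative count 93706)
94.969% <= 2.007 milliseconds (cumulative count 94969)
96.012% <= 2.103 milliseconds (cumulative count 96012)
99.530% <= 3.103 milliseconds (cumulative count 99530)
"""

points = parse_distribution(DIST_SAMPLE)
p95_bounds = bracket(points, 95.0)
p99_bounds = bracket(points, 99.0)
print("p95 lies between", p95_bounds, "ms")
print("p99 lies between", p99_bounds, "ms")
```

The summary values for PING_INLINE (p95 = 2.015 ms, p99 = 2.591 ms) fall inside the brackets computed from these lines, which is what one would expect if the two sections are consistent.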

====== PING_MBULK ======

100000 requests completed in 1.65 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.271 milliseconds (cumulative count 2)

50.000% <= 0.975 milliseconds (cumulative count 50124)

75.000% <= 1.351 milliseconds (cumulative count 75069)

87.500% <= 1.719 milliseconds (cumulative count 87532)

93.750% <= 2.047 milliseconds (cumulative count 93821)

96.875% <= 2.367 milliseconds (cumulative count 96924)

98.438% <= 2.631 milliseconds (cumulative count 98467)

99.219% <= 2.935 milliseconds (cumulative count 99219)

99.609% <= 3.207 milliseconds (cumulative count 99617)

99.805% <= 3.583 milliseconds (cumulative count 99807)

99.902% <= 3.839 milliseconds (cumulative count 99904)

99.951% <= 4.911 milliseconds (cumulative count 99952)

99.976% <= 5.159 milliseconds (cumulative count 99977)

99.988% <= 5.439 milliseconds (cumulative count 99988)

99.994% <= 5.559 milliseconds (cumulative count 99994)

99.997% <= 5.727 milliseconds (cumulative count 99997)

99.998% <= 5.743 milliseconds (cumulative count 99999)

99.999% <= 5.783 milliseconds (cumulative count 100000)

100.000% <= 5.783 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.037% <= 0.303 milliseconds (cumulative count 37)

9.392% <= 0.407 milliseconds (cumulative count 9392)

18.593% <= 0.503 milliseconds (cumulative count 18593)

24.260% <= 0.607 milliseconds (cumulative count 24260)

29.459% <= 0.703 milliseconds (cumulative count 29459)

34.916% <= 0.807 milliseconds (cumulative count 34916)

43.394% <= 0.903 milliseconds (cumulative count 43394)

52.790% <= 1.007 milliseconds (cumulative count 52790)

59.856% <= 1.103 milliseconds (cumulative count 59856)

66.991% <= 1.207 milliseconds (cumulative count 66991)

72.648% <= 1.303 milliseconds (cumulative count 72648)

77.734% <= 1.407 milliseconds (cumulative count 77734)

81.352% <= 1.503 milliseconds (cumulative count 81352)

84.467% <= 1.607 milliseconds (cumulative count 84467)

87.093% <= 1.703 milliseconds (cumulative count 87093)

89.624% <= 1.807 milliseconds (cumulative count 89624)

91.617% <= 1.903 milliseconds (cumulative count 91617)

93.239% <= 2.007 milliseconds (cumulative count 93239)

94.562% <= 2.103 milliseconds (cumulative count 94562)

99.493% <= 3.103 milliseconds (cumulative count 99493)

99.931% <= 4.103 milliseconds (cumulative count 99931)

99.970% <= 5.103 milliseconds (cumulative count 99970)

100.000% <= 6.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 60532.69 requests per second

latency summary (msec):

avg min p50 p95 p99 max

1.066 0.264 0.975 2.143 2.799 5.783

====== SET ======

100000 requests completed in 1.61 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.175 milliseconds (cumulative count 1)

50.000% <= 0.959 milliseconds (cumulative count 50087)

75.000% <= 1.391 milliseconds (cumulative count 75046)

87.500% <= 1.799 milliseconds (cumulative count 87645)

93.750% <= 2.151 milliseconds (cumulative count 93788)

96.875% <= 2.447 milliseconds (cumulative count 96913)

98.438% <= 2.695 milliseconds (cumulative count 98470)

99.219% <= 2.903 milliseconds (cumulative count 99242)

99.609% <= 3.143 milliseconds (cumulative count 99613)

99.805% <= 3.367 milliseconds (cumulative count 99806)

99.902% <= 3.551 milliseconds (cumulative count 99904)

99.951% <= 3.767 milliseconds (cumulative count 99953)

99.976% <= 4.047 milliseconds (cumulative count 99977)

99.988% <= 4.583 milliseconds (cumulative count 99988)

99.994% <= 4.679 milliseconds (cumulative count 99995)

99.997% <= 4.703 milliseconds (cumulative count 99997)

99.998% <= 4.727 milliseconds (cumulative count 99999)

99.999% <= 4.743 milliseconds (cumulative count 100000)

100.000% <= 4.743 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.016% <= 0.207 milliseconds (cumulative count 16)

0.128% <= 0.303 milliseconds (cumulative count 128)

9.433% <= 0.407 milliseconds (cumulative count 9433)

19.609% <= 0.503 milliseconds (cumulative count 19609)

26.760% <= 0.607 milliseconds (cumulative count 26760)

32.105% <= 0.703 milliseconds (cumulative count 32105)

38.087% <= 0.807 milliseconds (cumulative count 38087)

45.572% <= 0.903 milliseconds (cumulative count 45572)

53.954% <= 1.007 milliseconds (cumulative count 53954)

60.913% <= 1.103 milliseconds (cumulative count 60913)

67.066% <= 1.207 milliseconds (cumulative count 67066)

71.440% <= 1.303 milliseconds (cumulative count 71440)

75.677% <= 1.407 milliseconds (cumulative count 75677)

79.101% <= 1.503 milliseconds (cumulative count 79101)

82.343% <= 1.607 milliseconds (cumulative count 82343)

85.186% <= 1.703 milliseconds (cumulative count 85186)

87.824% <= 1.807 milliseconds (cumulative count 87824)

89.826% <= 1.903 milliseconds (cumulative count 89826)

91.791% <= 2.007 milliseconds (cumulative count 91791)

93.219% <= 2.103 milliseconds (cumulative count 93219)

99.580% <= 3.103 milliseconds (cumulative count 99580)

99.982% <= 4.103 milliseconds (cumulative count 99982)

100.000% <= 5.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 62266.50 requests per second

latency summary (msec):

avg min p50 p95 p99 max

1.070 0.168 0.959 2.247 2.839 4.743

====== GET ======

100000 requests completed in 1.76 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.199 milliseconds (cumulative count 1)

50.000% <= 1.047 milliseconds (cumulative count 50117)

75.000% <= 1.447 milliseconds (cumulative count 75291)

87.500% <= 1.823 milliseconds (cumulative count 87544)

93.750% <= 2.167 milliseconds (cumulative count 93830)

96.875% <= 2.463 milliseconds (cumulative count 96935)

98.438% <= 2.767 milliseconds (cumulative count 98470)

99.219% <= 3.159 milliseconds (cumulative count 99219)

99.609% <= 3.551 milliseconds (cumulative count 99615)

99.805% <= 4.735 milliseconds (cumulative count 99805)

99.902% <= 5.943 milliseconds (cumulative count 99904)

99.951% <= 6.951 milliseconds (cumulative count 99952)

99.976% <= 7.183 milliseconds (cumulative count 99976)

99.988% <= 7.271 milliseconds (cumulative count 99988)

99.994% <= 7.439 milliseconds (cumulative count 99994)

99.997% <= 8.159 milliseconds (cumulative count 99997)

99.998% <= 8.279 milliseconds (cumulative count 99999)

99.999% <= 9.503 milliseconds (cumulative count 100000)

100.000% <= 9.503 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.001% <= 0.207 milliseconds (cumulative count 1)

0.119% <= 0.303 milliseconds (cumulative count 119)

8.646% <= 0.407 milliseconds (cumulative count 8646)

16.732% <= 0.503 milliseconds (cumulative count 16732)

22.316% <= 0.607 milliseconds (cumulative count 22316)

27.285% <= 0.703 milliseconds (cumulative count 27285)

32.214% <= 0.807 milliseconds (cumulative count 32214)

36.807% <= 0.903 milliseconds (cumulative count 36807)

46.018% <= 1.007 milliseconds (cumulative count 46018)

55.227% <= 1.103 milliseconds (cumulative count 55227)

62.797% <= 1.207 milliseconds (cumulative count 62797)

68.356% <= 1.303 milliseconds (cumulative count 68356)

73.507% <= 1.407 milliseconds (cumulative count 73507)

77.640% <= 1.503 milliseconds (cumulative count 77640)

81.361% <= 1.607 milliseconds (cumulative count 81361)

84.383% <= 1.703 milliseconds (cumulative count 84383)

87.130% <= 1.807 milliseconds (cumulative count 87130)

89.599% <= 1.903 milliseconds (cumulative count 89599)

91.595% <= 2.007 milliseconds (cumulative count 91595)

93.016% <= 2.103 milliseconds (cumulative count 93016)

99.138% <= 3.103 milliseconds (cumulative count 99138)

99.720% <= 4.103 milliseconds (cumulative count 99720)

99.832% <= 5.103 milliseconds (cumulative count 99832)

99.920% <= 6.103 milliseconds (cumulative count 99920)

99.959% <= 7.103 milliseconds (cumulative count 99959)

99.996% <= 8.103 milliseconds (cumulative count 99996)

99.999% <= 9.103 milliseconds (cumulative count 99999)

100.000% <= 10.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 56850.48 requests per second

latency summary (msec):

avg min p50 p95 p99 max

1.135 0.192 1.047 2.271 2.999 9.503

====== INCR ======

100000 requests completed in 2.16 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.239 milliseconds (cumulative count 1)

50.000% <= 1.295 milliseconds (cumulative count 50549)

75.000% <= 1.727 milliseconds (cumulative count 75016)

87.500% <= 2.111 milliseconds (cumulative count 87571)

93.750% <= 2.455 milliseconds (cumulative count 93788)

96.875% <= 2.751 milliseconds (cumulative count 96916)

98.438% <= 2.975 milliseconds (cumulative count 98448)

99.219% <= 3.279 milliseconds (cumulative count 99221)

99.609% <= 4.327 milliseconds (cumulative count 99612)

99.805% <= 5.607 milliseconds (cumulative count 99807)

99.902% <= 7.223 milliseconds (cumulative count 99903)

99.951% <= 7.807 milliseconds (cumulative count 99952)

99.976% <= 8.935 milliseconds (cumulative count 99976)

99.988% <= 9.263 milliseconds (cumulative count 99988)

99.994% <= 9.559 milliseconds (cumulative count 99994)

99.997% <= 9.591 milliseconds (cumulative count 99997)

99.998% <= 9.647 milliseconds (cumulative count 99999)

99.999% <= 9.727 milliseconds (cumulative count 100000)

100.000% <= 9.727 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.004% <= 0.303 milliseconds (cumulative count 4)

0.104% <= 0.407 milliseconds (cumulative count 104)

0.366% <= 0.503 milliseconds (cumulative count 366)

0.650% <= 0.607 milliseconds (cumulative count 650)

1.565% <= 0.703 milliseconds (cumulative count 1565)

4.234% <= 0.807 milliseconds (cumulative count 4234)

9.838% <= 0.903 milliseconds (cumulative count 9838)

21.412% <= 1.007 milliseconds (cumulative count 21412)

33.588% <= 1.103 milliseconds (cumulative count 33588)

43.570% <= 1.207 milliseconds (cumulative count 43570)

51.125% <= 1.303 milliseconds (cumulative count 51125)

58.047% <= 1.407 milliseconds (cumulative count 58047)

64.017% <= 1.503 milliseconds (cumulative count 64017)

69.557% <= 1.607 milliseconds (cumulative count 69557)

74.004% <= 1.703 milliseconds (cumulative count 74004)

78.179% <= 1.807 milliseconds (cumulative count 78179)

81.607% <= 1.903 milliseconds (cumulative count 81607)

84.883% <= 2.007 milliseconds (cumulative count 84883)

87.385% <= 2.103 milliseconds (cumulative count 87385)

98.901% <= 3.103 milliseconds (cumulative count 98901)

99.574% <= 4.103 milliseconds (cumulative count 99574)

99.716% <= 5.103 milliseconds (cumulative count 99716)

99.848% <= 6.103 milliseconds (cumulative count 99848)

99.900% <= 7.103 milliseconds (cumulative count 99900)

99.971% <= 8.103 milliseconds (cumulative count 99971)

99.982% <= 9.103 milliseconds (cumulative count 99982)

100.000% <= 10.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 46382.19 requests per second

latency summary (msec):

avg min p50 p95 p99 max

1.451 0.232 1.295 2.559 3.151 9.727

====== LPUSH ======

100000 requests completed in 1.19 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.159 milliseconds (cumulative count 2)

50.000% <= 0.679 milliseconds (cumulative count 50336)

75.000% <= 0.999 milliseconds (cumulative count 75137)

87.500% <= 1.279 milliseconds (cumulative count 87648)

93.750% <= 1.567 milliseconds (cumulative count 93860)

96.875% <= 1.871 milliseconds (cumulative count 96881)

98.438% <= 2.159 milliseconds (cumulative count 98444)

99.219% <= 2.431 milliseconds (cumulative count 99229)

99.609% <= 2.655 milliseconds (cumulative count 99616)

99.805% <= 2.911 milliseconds (cumulative count 99806)

99.902% <= 3.095 milliseconds (cumulative count 99910)

99.951% <= 3.271 milliseconds (cumulative count 99952)

99.976% <= 3.655 milliseconds (cumulative count 99976)

99.988% <= 3.999 milliseconds (cumulative count 99988)

99.994% <= 4.151 milliseconds (cumulative count 99995)

99.997% <= 4.215 milliseconds (cumulative count 99997)

99.998% <= 4.287 milliseconds (cumulative count 99999)

99.999% <= 4.415 milliseconds (cumulative count 100000)

100.000% <= 4.415 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.012% <= 0.207 milliseconds (cumulative count 12)

0.111% <= 0.303 milliseconds (cumulative count 111)

10.552% <= 0.407 milliseconds (cumulative count 10552)

31.692% <= 0.503 milliseconds (cumulative count 31692)

44.885% <= 0.607 milliseconds (cumulative count 44885)

52.216% <= 0.703 milliseconds (cumulative count 52216)

60.202% <= 0.807 milliseconds (cumulative count 60202)

68.047% <= 0.903 milliseconds (cumulative count 68047)

75.668% <= 1.007 milliseconds (cumulative count 75668)

81.119% <= 1.103 milliseconds (cumulative count 81119)

85.225% <= 1.207 milliseconds (cumulative count 85225)

88.332% <= 1.303 milliseconds (cumulative count 88332)

91.032% <= 1.407 milliseconds (cumulative count 91032)

92.878% <= 1.503 milliseconds (cumulative count 92878)

94.441% <= 1.607 milliseconds (cumulative count 94441)

95.511% <= 1.703 milliseconds (cumulative count 95511)

96.426% <= 1.807 milliseconds (cumulative count 96426)

97.109% <= 1.903 milliseconds (cumulative count 97109)

97.759% <= 2.007 milliseconds (cumulative count 97759)

98.203% <= 2.103 milliseconds (cumulative count 98203)

99.911% <= 3.103 milliseconds (cumulative count 99911)

99.992% <= 4.103 milliseconds (cumulative count 99992)

100.000% <= 5.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 84245.99 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.801 0.152 0.679 1.655 2.351 4.415
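The brief run and this detailed run used the same parameters (the two command lines differ only in `-q`), yet the reported throughput per command differs noticeably. The following is a small sketch, not part of the original test, that quantifies the gap for the commands reported so far. The dictionary and function names are hypothetical; the figures are copied from the two 100-client runs in this report.

```python
# Throughput (requests per second) from the brief (-q) run with 100 clients.
QUIET_RPS = {
    "PING_INLINE": 81766.15,
    "PING_MBULK": 76394.20,
    "SET": 86281.27,
    "GET": 69589.42,
    "INCR": 78740.16,
    "LPUSH": 56401.58,
}

# Throughput summary from the detailed run with the same parameters.
DETAILED_RPS = {
    "PING_INLINE": 60168.47,
    "PING_MBULK": 60532.69,
    "SET": 62266.50,
    "GET": 56850.48,
    "INCR": 46382.19,
    "LPUSH": 84245.99,
}


def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (0.10 means +10%)."""
    return (after - before) / before


changes = {
    name: relative_change(QUIET_RPS[name], DETAILED_RPS[name])
    for name in QUIET_RPS
}

for name, change in changes.items():
    print(f"{name:12s} {change:+.1%}")
```

Differences of this size between two single runs suggest considerable run-to-run variance, so comparisons between concurrency levels are probably more reliable when each configuration is repeated several times and averaged.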

====== RPUSH ======

100000 requests completed in 1.44 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.223 milliseconds (cumulative count 1)

50.000% <= 0.895 milliseconds (cumulative count 50520)

75.000% <= 1.207 milliseconds (cumulative count 75318)

87.500% <= 1.583 milliseconds (cumulative count 87547)

93.750% <= 1.911 milliseconds (cumulative count 93850)

96.875% <= 2.215 milliseconds (cumulative count 96919)

98.438% <= 2.463 milliseconds (cumulative count 98445)

99.219% <= 2.703 milliseconds (cumulative count 99236)

99.609% <= 2.847 milliseconds (cumulative count 99619)

99.805% <= 2.991 milliseconds (cumulative count 99809)

99.902% <= 3.135 milliseconds (cumulative count 99903)

99.951% <= 3.295 milliseconds (cumulative count 99958)

99.976% <= 3.367 milliseconds (cumulative count 99978)

99.988% <= 3.527 milliseconds (cumulative count 99989)

99.994% <= 3.607 milliseconds (cumulative count 99994)

99.997% <= 3.631 milliseconds (cumulative count 99998)

99.998% <= 3.639 milliseconds (cumulative count 99999)

99.999% <= 3.655 milliseconds (cumulative count 100000)

100.000% <= 3.655 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.093% <= 0.303 milliseconds (cumulative count 93)

10.352% <= 0.407 milliseconds (cumulative count 10352)

24.513% <= 0.503 milliseconds (cumulative count 24513)

32.253% <= 0.607 milliseconds (cumulative count 32253)

37.674% <= 0.703 milliseconds (cumulative count 37674)

43.650% <= 0.807 milliseconds (cumulative count 43650)

51.203% <= 0.903 milliseconds (cumulative count 51203)

60.645% <= 1.007 milliseconds (cumulative count 60645)

69.382% <= 1.103 milliseconds (cumulative count 69382)

75.318% <= 1.207 milliseconds (cumulative count 75318)

79.121% <= 1.303 milliseconds (cumulative count 79121)

82.705% <= 1.407 milliseconds (cumulative count 82705)

85.496% <= 1.503 milliseconds (cumulative count 85496)

88.122% <= 1.607 milliseconds (cumulative count 88122)

90.289% <= 1.703 milliseconds (cumulative count 90289)

92.233% <= 1.807 milliseconds (cumulative count 92233)

93.738% <= 1.903 milliseconds (cumulative count 93738)

95.056% <= 2.007 milliseconds (cumulative count 95056)

96.060% <= 2.103 milliseconds (cumulative count 96060)

99.885% <= 3.103 milliseconds (cumulative count 99885)

100.000% <= 4.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 69348.12 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.961 0.216 0.895 2.007 2.623 3.655

====== LPOP ======

100000 requests completed in 1.24 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.159 milliseconds (cumulative count 1)

50.000% <= 0.647 milliseconds (cumulative count 50250)

75.000% <= 1.135 milliseconds (cumulative count 75168)

87.500% <= 1.527 milliseconds (cumulative count 87629)

93.750% <= 1.847 milliseconds (cumulative count 93870)

96.875% <= 2.135 milliseconds (cumulative count 96885)

98.438% <= 2.447 milliseconds (cumulative count 98445)

99.219% <= 2.759 milliseconds (cumulative count 99225)

99.609% <= 3.071 milliseconds (cumulative count 99611)

99.805% <= 3.287 milliseconds (cumulative count 99806)

99.902% <= 3.527 milliseconds (cumulative count 99903)

99.951% <= 3.687 milliseconds (cumulative count 99952)

99.976% <= 3.871 milliseconds (cumulative count 99976)

99.988% <= 3.951 milliseconds (cumulative count 99988)

99.994% <= 3.991 milliseconds (cumulative count 99994)

99.997% <= 4.031 milliseconds (cumulative count 99997)

99.998% <= 4.071 milliseconds (cumulative count 99999)

99.999% <= 4.095 milliseconds (cumulative count 100000)

100.000% <= 4.095 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.009% <= 0.207 milliseconds (cumulative count 9)

0.227% <= 0.303 milliseconds (cumulative count 227)

17.862% <= 0.407 milliseconds (cumulative count 17862)

37.394% <= 0.503 milliseconds (cumulative count 37394)

47.351% <= 0.607 milliseconds (cumulative count 47351)

53.771% <= 0.703 milliseconds (cumulative count 53771)

59.213% <= 0.807 milliseconds (cumulative count 59213)

63.896% <= 0.903 milliseconds (cumulative count 63896)

69.217% <= 1.007 milliseconds (cumulative count 69217)

73.840% <= 1.103 milliseconds (cumulative count 73840)

77.790% <= 1.207 milliseconds (cumulative count 77790)

81.111% <= 1.303 milliseconds (cumulative count 81111)

84.244% <= 1.407 milliseconds (cumulative count 84244)

87.019% <= 1.503 milliseconds (cumulative count 87019)

89.768% <= 1.607 milliseconds (cumulative count 89768)

91.732% <= 1.703 milliseconds (cumulative count 91732)

93.306% <= 1.807 milliseconds (cumulative count 93306)

94.619% <= 1.903 milliseconds (cumulative count 94619)

95.788% <= 2.007 milliseconds (cumulative count 95788)

96.684% <= 2.103 milliseconds (cumulative count 96684)

99.642% <= 3.103 milliseconds (cumulative count 99642)

100.000% <= 4.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 80645.16 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.856 0.152 0.647 1.935 2.647 4.095
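
As a sanity check on the LPOP figures above, the reported throughput should be close to the total request count divided by the elapsed time. The following is a minimal Python sketch of that arithmetic; the function name `implied_throughput` is an illustrative assumption and not part of redis-benchmark. Because the elapsed time is printed with only two decimals, the implied value can differ slightly from the reported one in other runs.

```python
def implied_throughput(requests: int, elapsed_seconds: float) -> float:
    """Return the requests per second implied by a request count and elapsed time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return requests / elapsed_seconds


# LPOP values copied from the report: 100000 requests completed in 1.24 seconds.
lpop_rps = implied_throughput(100000, 1.24)
print(f"{lpop_rps:.2f} requests per second")
```

For the LPOP run this agrees with the reported throughput summary of 80645.16 requests per second.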

====== RPOP ======

100000 requests completed in 1.12 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.159 milliseconds (cumulative count 1)

50.000% <= 0.543 milliseconds (cumulative count 50792)

75.000% <= 0.783 milliseconds (cumulative count 75000)

87.500% <= 1.159 milliseconds (cumulative count 87585)

93.750% <= 1.511 milliseconds (cumulative count 93813)

96.875% <= 1.887 milliseconds (cumulative count 96899)

98.438% <= 2.367 milliseconds (cumulative count 98451)

99.219% <= 2.879 milliseconds (cumulative count 99220)

99.609% <= 3.863 milliseconds (cumulative count 99611)

99.805% <= 5.783 milliseconds (cumulative count 99805)

99.902% <= 6.615 milliseconds (cumulative count 99904)

99.951% <= 6.967 milliseconds (cumulative count 99952)

99.976% <= 7.127 milliseconds (cumulative count 99977)

99.988% <= 7.239 milliseconds (cumulative count 99988)

99.994% <= 7.319 milliseconds (cumulative count 99995)

99.997% <= 7.359 milliseconds (cumulative count 99997)

99.998% <= 7.399 milliseconds (cumulative count 99999)

99.999% <= 7.407 milliseconds (cumulative count 100000)

100.000% <= 7.407 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.010% <= 0.207 milliseconds (cumulative count 10)

0.168% <= 0.303 milliseconds (cumulative count 168)

21.656% <= 0.407 milliseconds (cumulative count 21656)

45.519% <= 0.503 milliseconds (cumulative count 45519)

59.449% <= 0.607 milliseconds (cumulative count 59449)

70.176% <= 0.703 milliseconds (cumulative count 70176)

76.115% <= 0.807 milliseconds (cumulative count 76115)

79.855% <= 0.903 milliseconds (cumulative count 79855)

83.424% <= 1.007 milliseconds (cumulative count 83424)

86.081% <= 1.103 milliseconds (cumulative count 86081)

88.731% <= 1.207 milliseconds (cumulative count 88731)

90.700% <= 1.303 milliseconds (cumulative count 90700)

92.406% <= 1.407 milliseconds (cumulative count 92406)

93.702% <= 1.503 milliseconds (cumulative count 93702)

94.781% <= 1.607 milliseconds (cumulative count 94781)

95.624% <= 1.703 milliseconds (cumulative count 95624)

96.390% <= 1.807 milliseconds (cumulative count 96390)

96.985% <= 1.903 milliseconds (cumulative count 96985)

97.390% <= 2.007 milliseconds (cumulative count 97390)

97.729% <= 2.103 milliseconds (cumulative count 97729)

99.395% <= 3.103 milliseconds (cumulative count 99395)

99.648% <= 4.103 milliseconds (cumulative count 99648)

99.742% <= 5.103 milliseconds (cumulative count 99742)

99.835% <= 6.103 milliseconds (cumulative count 99835)

99.966% <= 7.103 milliseconds (cumulative count 99966)

100.000% <= 8.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 88888.89 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.713 0.152 0.543 1.631 2.711 7.407
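
To compare the latency summaries of the individual commands programmatically, the header line and the value line can be paired into a mapping. The sketch below is one possible way to do this in Python; the helper name `parse_latency_summary` is hypothetical, and it assumes both lines are whitespace-separated exactly as printed in this report.

```python
from typing import Dict


def parse_latency_summary(header: str, values: str) -> Dict[str, float]:
    """Pair each column name of a latency summary with its value in milliseconds."""
    names = header.split()
    numbers = [float(v) for v in values.split()]
    if len(names) != len(numbers):
        raise ValueError("header and value lines have different column counts")
    return dict(zip(names, numbers))


# RPOP latency summary copied from the report above.
rpop = parse_latency_summary(
    "avg min p50 p95 p99 max",
    "0.713 0.152 0.543 1.631 2.711 7.407",
)
print(rpop["p99"], rpop["max"])
```

Parsed this way, the RPOP run shows a p99 of 2.711 ms against a maximum of 7.407 ms, so its slowest requests sit well above the 99th percentile.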

====== SADD ======

100000 requests completed in 1.22 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.215 milliseconds (cumulative count 4)

50.000% <= 0.591 milliseconds (cumulative count 50435)

75.000% <= 1.055 milliseconds (cumulative count 75203)

87.500% <= 1.511 milliseconds (cumulative count 87531)

93.750% <= 1.823 milliseconds (cumulative count 93806)

96.875% <= 2.095 milliseconds (cumulative count 96888)

98.438% <= 2.327 milliseconds (cumulative count 98468)

99.219% <= 2.535 milliseconds (cumulative count 99229)

99.609% <= 2.703 milliseconds (cumulative count 99610)

99.805% <= 2.879 milliseconds (cumulative count 99806)

99.902% <= 3.287 milliseconds (cumulative count 99903)

99.951% <= 4.479 milliseconds (cumulative count 99953)

99.976% <= 4.607 milliseconds (cumulative count 99976)

99.988% <= 5.255 milliseconds (cumulative count 99988)

99.994% <= 5.415 milliseconds (cumulative count 99994)

99.997% <= 5.503 milliseconds (cumulative count 99998)

99.998% <= 5.511 milliseconds (cumulative count 99999)

99.999% <= 5.519 milliseconds (cumulative count 100000)

100.000% <= 5.519 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

0.150% <= 0.303 milliseconds (cumulative count 150)

28.666% <= 0.407 milliseconds (cumulative count 28666)

43.273% <= 0.503 milliseconds (cumulative count 43273)

51.692% <= 0.607 milliseconds (cumulative count 51692)

56.490% <= 0.703 milliseconds (cumulative count 56490)

61.294% <= 0.807 milliseconds (cumulative count 61294)

67.357% <= 0.903 milliseconds (cumulative count 67357)

73.072% <= 1.007 milliseconds (cumulative count 73072)

76.970% <= 1.103 milliseconds (cumulative count 76970)

80.093% <= 1.207 milliseconds (cumulative count 80093)

82.585% <= 1.303 milliseconds (cumulative count 82585)

85.177% <= 1.407 milliseconds (cumulative count 85177)

87.368% <= 1.503 milliseconds (cumulative count 87368)

89.613% <= 1.607 milliseconds (cumulative count 89613)

91.602% <= 1.703 milliseconds (cumulative count 91602)

93.545% <= 1.807 milliseconds (cumulative count 93545)

94.910% <= 1.903 milliseconds (cumulative count 94910)

96.043% <= 2.007 milliseconds (cumulative count 96043)

96.958% <= 2.103 milliseconds (cumulative count 96958)

99.876% <= 3.103 milliseconds (cumulative count 99876)

99.914% <= 4.103 milliseconds (cumulative count 99914)

99.984% <= 5.103 milliseconds (cumulative count 99984)

100.000% <= 6.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 82034.45 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.813 0.208 0.591 1.919 2.471 5.519

====== HSET ======

100000 requests completed in 1.53 seconds

100 parallel clients

10 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.167 milliseconds (cumulative count 2)

50.000% <= 0.879 milliseconds (cumulative count 50010)

75.000% <= 1.335 milliseconds (cumulative count 75212)

87.500% <= 1.791 milliseconds (cumulative count 87501)

93.750% <= 2.239 milliseconds (cumulative count 93827)

96.875% <= 2.655 milliseconds (cumulative count 96879)

98.438% <= 3.007 milliseconds (cumulative count 98451)

99.219% <= 3.367 milliseconds (cumulative count 99232)

99.609% <= 3.623 milliseconds (cumulative count 99615)

99.805% <= 3.919 milliseconds (cumulative count 99807)

99.902% <= 4.319 milliseconds (cumulative count 99904)

99.951% <= 4.703 milliseconds (cumulative count 99952)

99.976% <= 5.031 milliseconds (cumulative count 99976)